Preview Deployments with Traefik, Docker Compose and GitLab CI

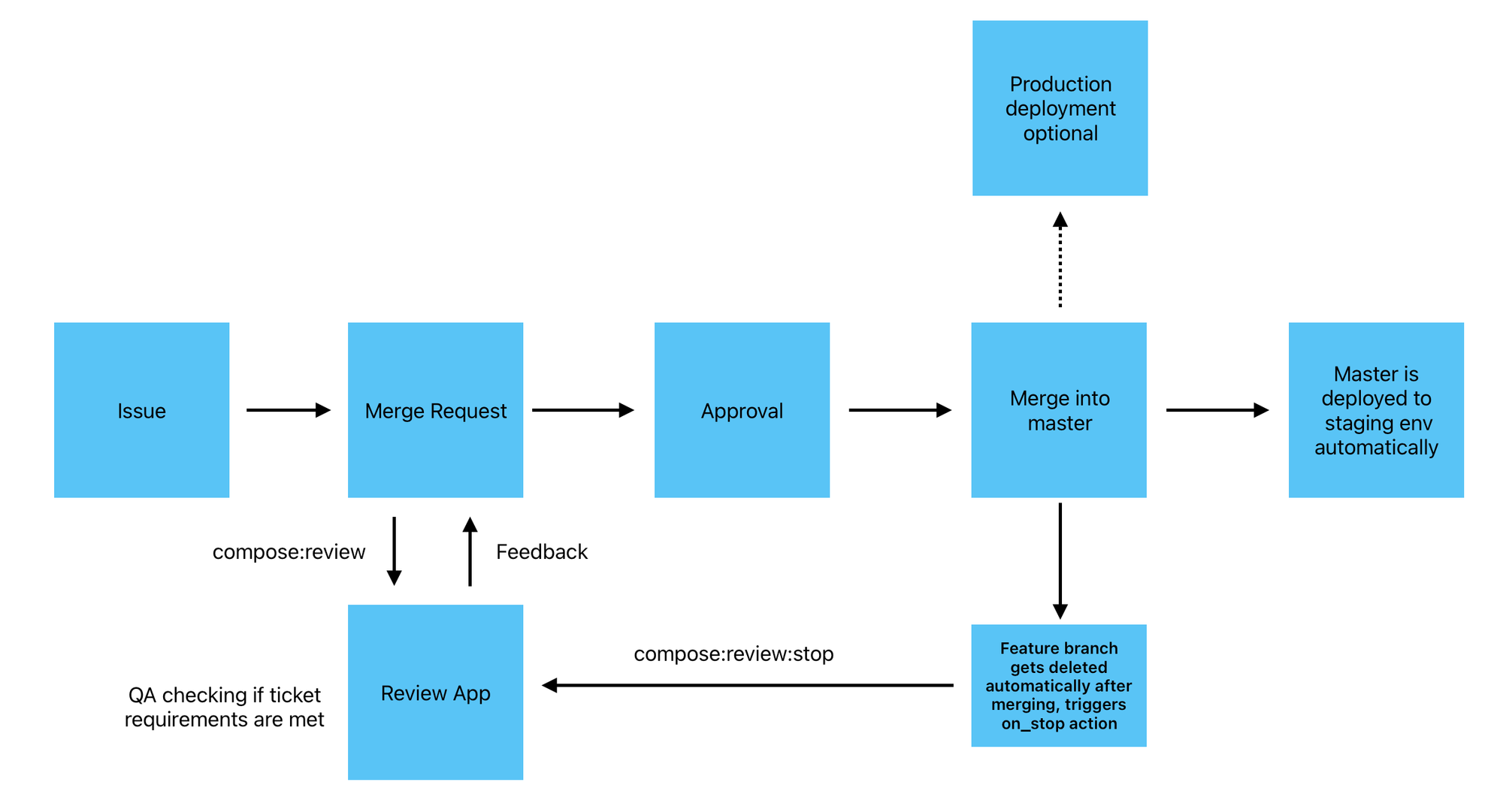

In the fast-paced world of web app development, staying ahead of the curve is crucial. One of the most effective ways to ensure your web app is always at its best is by using preview deployments (sometimes called review apps). This technique has been made popular by static website hosting services such as Netlify or Vercel. But what exactly are preview deployments, and how can they benefit your development process? Let’s dive in!

What are preview deployments?

Preview deployments are temporary, live environments that are automatically created for each pull request in your code repository. They allow developers, testers, and stakeholders to see and interact with the latest changes in a real-world setting before they are merged into the main branch. Think of them as a sneak peek into the future of your app, providing a sandbox where you can play, test, and refine.

Benefits of preview deployments

- Early Detection of Issues: By testing changes in an isolated environment, you can catch bugs and issues early in the development cycle, reducing the risk of them making it into production.

- Improved Collaboration: Preview deployments provide a tangible way for non-technical stakeholders to review and provide feedback on new features, ensuring everyone is on the same page.

- Faster Development Cycles: With automated creation and deployment, preview deployments streamline the testing process, allowing for quicker iterations and faster time-to-market.

- Enhanced Quality Assurance: Continuous testing in a preview environment ensures that each change is thoroughly vetted, leading to a more stable and reliable final product.

A Practical Example: Creating preview deployments with Traefik, Docker Compose, and GitLab CI

Let’s look at a practical example of how preview deployments can be implemented. Imagine a project whose services live in a single monolithic repository (a.k.a. monorepo): app, cms, api, dbcms (the database for the CMS) and dbapi (the database for the API). The app, api and cms services are separate projects with their own folders in the repo, while the two databases exist only as Docker Compose services. Here is the folder structure:

```
/my-project
├── /app                      # our application
├── /api                      # backend of our application
├── /cms                      # user-friendly interface to manage app content
├── /compose                  # compose configurations for all our environments
│   ├── docker-compose.yml
│   ├── docker-compose.staging.yml
│   ├── docker-compose.production.yml
│   ├── docker-compose.review.yml
│   └── test.dockercontext    # exported Docker context for the testing server
├── /scripts                  # utility scripts called from the CI jobs (see below)
│   ├── copy_volumes.sh
│   └── remove_review_containers.sh
├── /traefik                  # configuration for our global Traefik instance
│   └── docker-compose.yml
└── .gitlab-ci.yml            # CI configuration file
```

Step-by-step guide:

- Set up Traefik as a reverse proxy

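We run a single Traefik instance as the reverse proxy on a shared testing server. With the Docker provider enabled, Traefik watches the Docker socket and builds its routes from the labels of the running containers, so every new environment becomes reachable as soon as its containers start, without any change to Traefik's own configuration. Only containers labeled traefik.enable=true are exposed, and all HTTP traffic is redirected to HTTPS.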

```yaml
networks:
  default:
    # join the shared "web" network instead of creating a project network
    name: web
    external: true

volumes:
  # named volumes mounted by a service must be declared at the top level
  letsencrypt:

services:
  traefik:
    image: traefik:v2.4
    container_name: traefik
    command:
      - "--log.level=DEBUG"
      - "--api.dashboard=true"
      - "--providers.docker=true"
      - "--providers.docker.network=web"
      - "--providers.docker.exposedbydefault=false"
      - "--entrypoints.web.address=:80"
      - "--entrypoints.websecure.address=:443"
      - "--entrypoints.traefik.address=:8080"
      - "--entrypoints.web.http.redirections.entryPoint.to=websecure"
      - "--entrypoints.web.http.redirections.entryPoint.scheme=https"
      - "--entrypoints.websecure.http.tls=true"
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.dashboard.entrypoints=traefik"
      - "traefik.http.routers.dashboard.rule=PathPrefix(`/api`) || PathPrefix(`/dashboard`)"
      - "traefik.http.routers.dashboard.service=api@internal"
    ports:
      - "80:80"
      - "443:443"
      - "8080:8080"
    volumes:
      - letsencrypt:/letsencrypt
      - /var/run/docker.sock:/var/run/docker.sock
    logging:
      options:
        max-size: "10m"
        max-file: "10"
    restart: always
```

traefik/docker-compose.yml

- Define backend services

The core service configuration lives in compose/docker-compose.yml. Every environment starts from this file, and individual settings can be overridden per environment (e.g., for preview deployments or staging) with an additional Compose file passed via a second -f flag, as the CI jobs below do with docker-compose.review.yml.

Here is the basic configuration for our app, api, dbapi, cms, and dbcms services:

```yaml
services:
  app:
    build:
      context: ../app # build context: folder containing the app's Dockerfile
    expose:
      - 8080
    depends_on:
      - api
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-app.entrypoints=web,websecure"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-app.priority=1"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-app.rule=Host(`${HOSTNAME}`, `www.${HOSTNAME}`)"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-app.middlewares=${COMPOSE_PROJECT_NAME}-app-path"
      # chain: redirect "/<segment>" to "/<segment>/", otherwise strip "/<segment>" before forwarding
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-app-path.chain.middlewares=${COMPOSE_PROJECT_NAME}-app-redirectregex,${COMPOSE_PROJECT_NAME}-app-stripprefixregex"
      # "$$" escapes "$" so that Compose does not try to interpolate it
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-app-redirectregex.redirectregex.regex=^(https?://[^/]+/[a-zA-Z0-9]+)$$"
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-app-redirectregex.redirectregex.replacement=$${1}/"
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-app-redirectregex.redirectregex.permanent=true"
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-app-stripprefixregex.stripprefixregex.regex=/[a-zA-Z0-9]+"

  api:
    build: ../api
    expose:
      - 8080
    depends_on:
      - dbapi
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-api.entrypoints=web,websecure"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-api.priority=4"
      # assumption: the API is served under "/<segment>/api", matching the stripprefixregex below;
      # without a path condition this higher-priority router would also catch all app requests
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-api.rule=Host(`${HOSTNAME}`, `www.${HOSTNAME}`) && PathPrefix(`/{segment:[a-zA-Z0-9]+}/api`)"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-api.middlewares=${COMPOSE_PROJECT_NAME}-api-stripprefixregex"
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-api-stripprefixregex.stripprefixregex.regex=/[a-zA-Z0-9]+/api"
    environment:
      # your environment variables
      - ...

  dbapi:
    # ...

  dbcms:
    # ...

  cms:
    build:
      context: ../cms # build context: folder containing the CMS Dockerfile
    # no fixed container_name: every preview deployment runs its own cms container,
    # and a hard-coded name would clash between them
    labels:
      - "traefik.enable=true"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-cms.entrypoints=web,websecure"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-cms.priority=5"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-cms.rule=Host(`${HOSTNAME}`, `www.${HOSTNAME}`) && PathPrefix(`/cms`)"
      - "traefik.http.middlewares.${COMPOSE_PROJECT_NAME}-cms-stripprefix.stripprefix.prefixes=/cms"
      - "traefik.http.routers.${COMPOSE_PROJECT_NAME}-cms.middlewares=${COMPOSE_PROJECT_NAME}-cms-stripprefix"
    environment:
      # your environment variables
      - ...
```

compose/docker-compose.yml
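The labels on app and api pack a fair amount of routing logic into a few regular expressions. As a rough illustration, the bash sketch below simulates what those middlewares do to a request, using a first path segment such as /en purely as an example value. It is only an approximation. The helper names are hypothetical, bash's extended regular expressions stand in for Traefik's Go regexp engine (the two behave the same for these simple patterns), and the sketch assumes a prefix is stripped only when the match starts at the beginning of the path.

```shell
# Rough simulation of the app/api middlewares from compose/docker-compose.yml.
# Hypothetical helpers for illustration only; Traefik evaluates the real patterns in Go.

# redirectregex on the app router: "https://host/<segment>" -> "https://host/<segment>/"
app_redirect() {
  local url=$1
  local re='^(https?://[^/]+/[a-zA-Z0-9]+)$'
  if [[ $url =~ $re ]]; then
    printf '%s/\n' "${BASH_REMATCH[1]}"   # permanent redirect target
  else
    printf '%s\n' "$url"                   # no redirect, request continues down the chain
  fi
}

# stripprefixregex: remove the matched prefix from the start of the path,
# making sure what remains still starts with "/"
strip_prefix_regex() {
  local path=$1 pattern=$2
  local re="^(${pattern})"
  if [[ $path =~ $re ]]; then
    local rest=${path#"${BASH_REMATCH[1]}"}
    [[ $rest == /* ]] || rest="/$rest"
    printf '%s\n' "$rest"
  else
    printf '%s\n' "$path"
  fi
}

# path the app container receives, e.g. "/en/about" -> "/about"
app_backend_path() { strip_prefix_regex "$1" '/[a-zA-Z0-9]+'; }

# path the api container receives, e.g. "/en/api/users" -> "/users"
api_backend_path() { strip_prefix_regex "$1" '/[a-zA-Z0-9]+/api'; }
```

For example, app_redirect https://feature-x.test.example.com/en prints https://feature-x.test.example.com/en/, and api_backend_path /en/api/users prints /users, the path the api container would see.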

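To let our global Traefik instance on the testing server reach the containers of staging and of every preview deployment, we attach the publicly routed services (app, api and cms) to a shared external Docker network, which we call web. Every Compose project still keeps its own default network for internal traffic, so the databases, which are not attached to web, remain reachable only from within their own environment. Because the network is declared external, Compose does not create it: it has to exist before the first deployment, for example created once on the server with docker network create web.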

```yaml
services:
  app:
    networks:
      - default
      - web
  api:
    networks:
      - default
      - web
  cms:
    networks:
      - default
      - web

networks:
  web:
    external: true
```

compose/docker-compose.review.yml

- Configure GitLab CI

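With our Docker Compose configuration in place, the next step is to automate the deployment of preview environments with GitLab CI. The pipeline offers a manual deploy job for every push to an unprotected branch, which becomes that branch's preview deployment, and a manual staging deployment for the main branch. Each preview gets its own subdomain built from the branch slug (CI_COMMIT_REF_SLUG.test.example.com), which assumes a wildcard DNS record for *.test.example.com pointing at the testing server.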

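As you will see, we use Docker contexts to access our testing server and deploy the services. A context lets the docker CLI inside the CI job run commands against a remote Docker host, here over SSH, as if it were local. The context is exported once to compose/test.dockercontext (with docker context export) and imported again at the start of every job.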

```yaml
stages:
  - deploy

.deploy_compose:
  stage: deploy
  image: docker:23
  services:
    - docker:dind
  before_script:
    - apk add bash curl
    # https://docs.gitlab.com/ee/ci/ssh_keys/#ssh-keys-when-using-the-docker-executor
    - "command -v ssh-agent >/dev/null || ( apk --update add openssh-client )"
    - eval $(ssh-agent -s)
    - echo "$SSH_KEY" | tr -d '\r' | ssh-add -
    - mkdir -p ~/.ssh
    - chmod 700 ~/.ssh
    - '[[ -f /.dockerenv ]] && echo -e "Host *\n\tStrictHostKeyChecking no\n\n" >> ~/.ssh/config'
    # import the exported context that points to the remote testing server
    - docker context import $DOCKER_CONTEXT_NAME $DOCKER_CONTEXT_FILE
    - cd compose
    - echo "$DOTENV" | tr -d '\r' > .env
  script:
    # Compose interpolates ${HOSTNAME} and ${COMPOSE_PROJECT_NAME} on the machine that runs
    # "docker compose", i.e. this CI job container, not the remote server. Setting them inline maps
    # our job variables to the names the compose file expects (and overrides the container's own HOSTNAME).
    - HOSTNAME=$COMPOSE_HOSTNAME COMPOSE_PROJECT_NAME=$COMPOSE_NAME docker --context $DOCKER_CONTEXT_NAME compose -f docker-compose.yml -p $COMPOSE_NAME pull
    - HOSTNAME=$COMPOSE_HOSTNAME COMPOSE_PROJECT_NAME=$COMPOSE_NAME docker --context $DOCKER_CONTEXT_NAME compose -f docker-compose.yml -p $COMPOSE_NAME build --no-cache
    - HOSTNAME=$COMPOSE_HOSTNAME COMPOSE_PROJECT_NAME=$COMPOSE_NAME docker --context $DOCKER_CONTEXT_NAME compose -f docker-compose.yml -p $COMPOSE_NAME up -d
    - docker --context $DOCKER_CONTEXT_NAME system prune -af
  after_script:
    # delete the ssh key manually; after_script runs in a separate shell without the
    # SSH_AUTH_SOCK from before_script, so tolerate failure (the agent ends with the job anyway)
    - ssh-add -D || true

compose:staging:
  extends: .deploy_compose
  environment:
    name: staging
    url: https://staging.test.example.com
    deployment_tier: staging
  variables:
    DOCKER_CONTEXT_NAME: "example-test"
    DOCKER_CONTEXT_FILE: "compose/test.dockercontext"
    COMPOSE_NAME: "staging"
    COMPOSE_HOSTNAME: "staging.test.example.com"
    DOTENV: $DOTENV_STAGING
  script:
    # same script as compose:review, but the dynamic env hostname is simply the compose hostname
    - DYNAMIC_ENVIRONMENT_HOSTNAME=$COMPOSE_HOSTNAME
    - !reference [compose:review, script]
  rules:
    - if: $CI_COMMIT_BRANCH == "main"
      when: manual
  allow_failure: true

compose:review:
  extends: .deploy_compose
  environment:
    name: review/$CI_COMMIT_REF_SLUG
    url: $DYNAMIC_ENVIRONMENT_URL
    deployment_tier: testing
    on_stop: "compose:review:stop"
  variables:
    DOCKER_CONTEXT_NAME: "test"
    DOCKER_CONTEXT_FILE: "compose/test.dockercontext"
    COMPOSE_NAME: $CI_COMMIT_REF_SLUG
    COMPOSE_HOSTNAME: "$CI_COMMIT_REF_SLUG.test.example.com"
    DOTENV: $DOTENV_TEST
  before_script:
    - !reference [.deploy_compose, before_script]
    - DYNAMIC_ENVIRONMENT_HOSTNAME=$COMPOSE_HOSTNAME
    - sed -i "s/BASE_URL=.*/BASE_URL=https:\/\/$DYNAMIC_ENVIRONMENT_HOSTNAME/" .env
    # copy the volumes of the staging instance to the new review instance,
    # so that the review app already has data to interact with (script shown below)
    - ../scripts/copy_volumes.sh --context_name=$DOCKER_CONTEXT_NAME --compose_name=$COMPOSE_NAME
  script:
    - HOSTNAME=$DYNAMIC_ENVIRONMENT_HOSTNAME COMPOSE_PROJECT_NAME=$COMPOSE_NAME docker --context $DOCKER_CONTEXT_NAME compose -f docker-compose.yml -f docker-compose.review.yml -p $COMPOSE_NAME pull
    - HOSTNAME=$DYNAMIC_ENVIRONMENT_HOSTNAME COMPOSE_PROJECT_NAME=$COMPOSE_NAME docker --context $DOCKER_CONTEXT_NAME compose -f docker-compose.yml -f docker-compose.review.yml -p $COMPOSE_NAME build --no-cache
    # to avoid networking issues, review app containers are removed and recreated
    - ../scripts/remove_review_containers.sh --context_name=$DOCKER_CONTEXT_NAME --compose_name=$COMPOSE_NAME
    - HOSTNAME=$DYNAMIC_ENVIRONMENT_HOSTNAME COMPOSE_PROJECT_NAME=$COMPOSE_NAME docker --context $DOCKER_CONTEXT_NAME compose -f docker-compose.yml -f docker-compose.review.yml -p $COMPOSE_NAME up -d
    - docker --context $DOCKER_CONTEXT_NAME system prune -af
    # write the dynamic env URL to deploy.env; GitLab reads it back via artifacts:reports:dotenv
    - echo "DYNAMIC_ENVIRONMENT_URL=https://$DYNAMIC_ENVIRONMENT_HOSTNAME" >> deploy.env
  artifacts:
    reports:
      dotenv: compose/deploy.env
  rules:
    - !reference [.review_rules, rules]
  allow_failure: true

compose:review:stop:
  extends: .deploy_compose
  environment:
    name: review/$CI_COMMIT_REF_SLUG
    deployment_tier: testing
    action: stop
  variables:
    # the branch may already be deleted when this job runs, so skip the checkout
    GIT_STRATEGY: none
    DOCKER_CONTEXT_NAME: "test"
    DOCKER_CONTEXT_FILE: "compose/test.dockercontext"
    COMPOSE_NAME: $CI_COMMIT_REF_SLUG
    COMPOSE_HOSTNAME: "$CI_COMMIT_REF_SLUG.test.example.com"
    DOTENV: $DOTENV_TEST
  before_script:
    # clone the default branch instead, to get the scripts and the Docker context file
    - apk add git
    - rm -rf /builds/<PROJECT NAME>
    - mkdir /builds/<PROJECT NAME>
    - git clone $CI_REPOSITORY_URL --single-branch /builds/<PROJECT NAME>/
    - cd /builds/<PROJECT NAME>/
    - !reference [.deploy_compose, before_script]
  script:
    - docker --context $DOCKER_CONTEXT_NAME compose -p $COMPOSE_NAME down -v
    - docker --context $DOCKER_CONTEXT_NAME system prune -af
    - docker --context $DOCKER_CONTEXT_NAME volume prune -af --filter label=${COMPOSE_NAME}
  rules:
    - !reference [.review_rules, rules]
  allow_failure: true

.review_rules:
  rules:
    - if: $CI_COMMIT_REF_PROTECTED == "false" && $CI_PIPELINE_SOURCE == "push"
      when: manual
```

.gitlab-ci.yml

As you can see, every compose:XXX job extends the hidden .deploy_compose job and so reuses its configuration, most importantly the before_script that lets the job container communicate with our remote server via SSH. Jobs that need extra setup (compose:review and compose:review:stop) define their own before_script and pull the shared steps back in with !reference, and every job defines its own script, which replaces the one inherited from .deploy_compose.
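The review job derives everything from CI_COMMIT_REF_SLUG: the Compose project name, the hostname, and the BASE_URL written into .env. The bash sketch below mirrors those steps outside of GitLab so they can be tried locally. The function names are hypothetical, and the validation rule is an assumption chosen so that one name works both as a Compose project name and as a single DNS label (lowercase letters, digits and dashes, no leading or trailing dash, at most 63 characters). GitLab's slugs already satisfy it, so the check mainly guards against a hand-set COMPOSE_NAME.

```shell
# Hypothetical helpers mirroring the compose:review variables; not part of the original pipeline.

# True if $1 is usable both as a Compose project name and as a DNS label.
is_valid_review_name() {
  local name=$1
  local re='^[a-z0-9]([a-z0-9-]*[a-z0-9])?$'
  [[ ${#name} -le 63 && $name =~ $re ]]
}

# Same pattern as COMPOSE_HOSTNAME: "<slug>.<base domain>"
review_hostname() {
  printf '%s.%s\n' "$1" "$2"
}

# Point BASE_URL in an env file at the preview hostname (GNU/BusyBox "sed -i" syntax).
# "^" anchors the match so variables like API_BASE_URL are left alone,
# and "|" as delimiter avoids escaping the slashes in the URL.
set_base_url() {
  local env_file=$1 hostname=$2
  sed -i "s|^BASE_URL=.*|BASE_URL=https://${hostname}|" "$env_file"
}
```

In the pipeline, is_valid_review_name "$CI_COMMIT_REF_SLUG" could serve as an early guard, and set_base_url .env "$DYNAMIC_ENVIRONMENT_HOSTNAME" could replace the inline sed. Note that the unanchored pattern in the job would also rewrite a line such as API_BASE_URL=....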

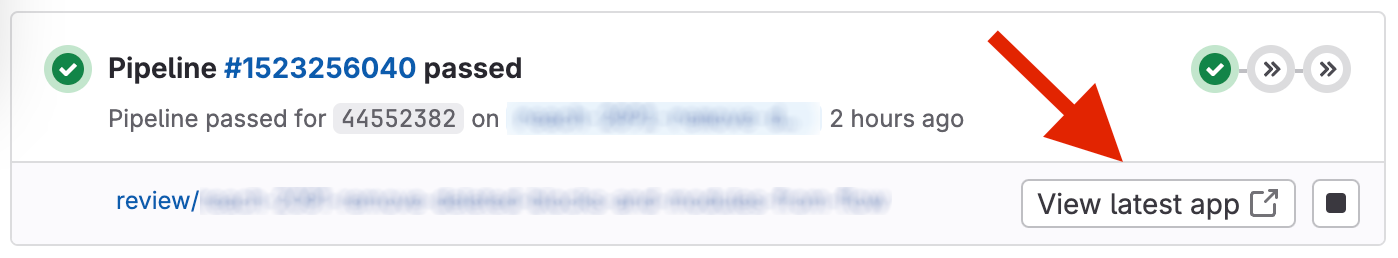

In the compose:review job we also use GitLab's artifacts reports feature:

```yaml
compose:review:
  ...
  script:
    - ...
    - echo "DYNAMIC_ENVIRONMENT_URL=https://$DYNAMIC_ENVIRONMENT_HOSTNAME" >> deploy.env
  artifacts:
    reports:
      dotenv: compose/deploy.env
```

Here the URL of the preview deployment is written as DYNAMIC_ENVIRONMENT_URL to deploy.env and uploaded as a dotenv report artifact. GitLab turns the entries of that file into variables, so environment:url: $DYNAMIC_ENVIRONMENT_URL resolves to the actual preview URL. The link then shows up in GitLab's interface, and developers and reviewers can open the deployed review app with one click.
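The report file itself is plain KEY=VALUE text, and GitLab also passes its variables on to later jobs that depend on compose:review (through needs or dependencies). If a script ever needs the URL outside GitLab, for example to smoke-test the preview, a small reader that does not source the file is enough. This is a hypothetical sketch: write_deploy_env and dotenv_get are not part of the pipeline, and the writer uses > instead of the job's >> so that rerunning it does not accumulate duplicate lines.

```shell
# Hypothetical helpers around the dotenv report written by compose:review.

# (Re)write the report with a single KEY=VALUE line.
write_deploy_env() {
  local file=$1 hostname=$2
  printf 'DYNAMIC_ENVIRONMENT_URL=https://%s\n' "$hostname" > "$file"
}

# Print the value of one key without sourcing the file (nothing in it gets executed).
# Returns 1 if the key is not present.
dotenv_get() {
  local file=$1 key=$2 line
  while IFS= read -r line || [[ -n $line ]]; do
    if [[ $line == "$key="* ]]; then
      printf '%s\n' "${line#*=}"
      return 0
    fi
  done < "$file"
  return 1
}
```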

The compose:review job calls a utility script that copies Docker volumes. It fills the dbcms and dbapi volumes of the new preview deployment with the current data of the staging instance, so the preview starts out pre-populated. Volumes that already exist are left untouched, so redeploying a branch keeps the data of its preview.

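```bash
#!/usr/bin/env bash
set -euo pipefail

# parse --context_name=... and --compose_name=...
while [ $# -gt 0 ]; do
  case "$1" in
    --context_name=*)
      context_name="${1#*=}"
      ;;
    --compose_name=*)
      compose_name="${1#*=}"
      ;;
    *)
      echo "Unknown argument: $1" >&2
      exit 1
      ;;
  esac
  shift
done

export CONTEXT_NAME="${context_name:?--context_name is missing.}"
export COMPOSE_NAME="${compose_name:?--compose_name is missing.}"

# Compose names volumes "<project>_<volume>"
declare -a volumes=("dbcms" "dbapi")

for i in "${volumes[@]}"; do
  # check the exact volume name (grepping "volume ls" would also match e.g. other-${COMPOSE_NAME}_${i})
  if ! docker --context "$CONTEXT_NAME" volume inspect "${COMPOSE_NAME}_${i}" >/dev/null 2>&1; then
    echo "${COMPOSE_NAME}_${i} volume does not exist, creating volume..."
    docker --context "$CONTEXT_NAME" volume create "${COMPOSE_NAME}_${i}" --label="${COMPOSE_NAME}"
    # source: the volumes of the staging project (COMPOSE_NAME "staging" in .gitlab-ci.yml);
    # cp -a keeps ownership and permissions, which database data directories depend on
    docker --context "$CONTEXT_NAME" run --rm -v "staging_${i}:/from" -v "${COMPOSE_NAME}_${i}:/to" alpine ash -c "cp -a /from/. /to"
  else
    echo "${COMPOSE_NAME}_${i} volume exists."
  fi
done
```

scripts/copy_volumes.sh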

The compose:review:stop job, which tears a preview deployment down, might look a little confusing at first. compose:review registers it through the on_stop attribute, so GitLab runs it whenever the review environment is stopped, either manually from GitLab's interface or automatically when the branch is deleted. It then removes the environment's containers, networks and volumes (docker compose down -v) and prunes the resources left over on the server, so nothing unnecessary keeps running.

The job inherits the SSH and Docker context setup from .deploy_compose, with one twist: it sets GIT_STRATEGY: none, because by the time an environment is stopped its branch may already be gone, and GitLab's documentation suggests not checking out code in stop jobs for that reason. Instead, the job clones the repository's default branch itself to get the scripts and the Docker context file, runs the shared before_script, and replaces the script with the teardown commands. For completeness, here is remove_review_containers.sh, which compose:review runs right before docker compose up -d so that the containers are recreated:

```bash
#!/usr/bin/env bash
set -euo pipefail

# parse --context_name=... and --compose_name=...
while [ $# -gt 0 ]; do
  case "$1" in
    --context_name=*)
      context_name="${1#*=}"
      ;;
    --compose_name=*)
      compose_name="${1#*=}"
      ;;
    *)
      echo "Unknown argument: $1" >&2
      exit 1
      ;;
  esac
  shift
done

export CONTEXT_NAME="${context_name:?--context_name is missing.}"
export COMPOSE_NAME="${compose_name:?--compose_name is missing.}"

# select containers by the project label Compose sets on them; a "name=" filter is a
# substring match and could also hit containers of other projects
containers=$(docker --context "$CONTEXT_NAME" container ls -aq --filter "label=com.docker.compose.project=$COMPOSE_NAME")
echo "$containers"

if [[ -z "$containers" ]]; then
  echo "Containers don't exist yet; docker compose up will create them."
else
  echo "Removing existing containers before recreating..."
  # unquoted on purpose: one argument per container ID
  docker --context "$CONTEXT_NAME" container rm --force $containers
fi
```

scripts/remove_review_containers.sh
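Both scripts repeat the same --key=value parsing loop. If more helper scripts join them, one option is to move that loop into a shared file. The sketch below assumes a hypothetical scripts/lib/args.sh with a parse_args function that turns every --some_key=value argument into a shell variable some_key (dashes in the key become underscores); neither the file nor the function exists in the original setup.

```shell
# Hypothetical scripts/lib/args.sh: shared "--key=value" parsing for the CI helper scripts.
# Each --some_key=value argument becomes a global shell variable some_key="value".
parse_args() {
  local _pa_arg _pa_key
  local _pa_name_re='^[A-Za-z_][A-Za-z0-9_]*$'
  for _pa_arg in "$@"; do
    case "$_pa_arg" in
      --*=*)
        _pa_key=${_pa_arg%%=*}      # "--context_name"
        _pa_key=${_pa_key#--}       # "context_name"
        _pa_key=${_pa_key//-/_}     # also accept --context-name
        if [[ ! $_pa_key =~ $_pa_name_re ]]; then
          echo "Invalid argument name: $_pa_arg" >&2
          return 1
        fi
        # everything after the first "=" is the value, so values may contain "="
        printf -v "$_pa_key" '%s' "${_pa_arg#*=}"
        ;;
      *)
        echo "Unknown argument: $_pa_arg" >&2
        return 1
        ;;
    esac
  done
}
```

Each script would then source this file and call parse_args "$@" in place of its while loop, keeping the existing ${context_name:?...} and ${compose_name:?...} checks unchanged.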

That's it! Of course, this setup is only one of many ways to spin up preview deployments. At Hybrid Heroes, this pattern has proven very reliable, especially for complex projects where experimenting on the shared staging data could have unwanted consequences and slow down development.

Conclusion

In this post, we demonstrated how to configure preview deployments for a monorepo project using Traefik, Docker Compose, and GitLab CI. This setup lets developers deploy an isolated environment for each branch with a single click, making it easier to test, review, and collaborate across the different parts of the project. It also gives non-technical stakeholders easy access to the application's services. If you have any questions or need further assistance, feel free to reach out!

Happy coding! 🚀