Building an AI Chat App with React Native and Azure AI Studio

AI has been used for quite some time in a wide range of applications, from automating processes to operating self-driving cars. However, only with the advent of generative AI, and most importantly large language models (LLMs) such as those powering OpenAI's ChatGPT, have its capabilities become accessible to average users.

Businesses are already leveraging LLMs in a variety of roles, including:

- Customer Support: LLMs can be integrated into chatbots and virtual assistants to respond to customer queries, reducing wait times and improving customer satisfaction.

- Sales Assistance: LLMs can provide personalized product recommendations, enhancing user experience and engagement.

- Marketing: LLMs can generate high-quality, original content for articles, reports, and marketing materials.

- Legal: LLMs can assist in drafting and reviewing legal documents, contracts, and policies, and in checking them for compliance.

- Knowledge Management: LLMs can streamline the collection, organization, and distribution of knowledge, making it more accessible and thus increasing operational efficiency.

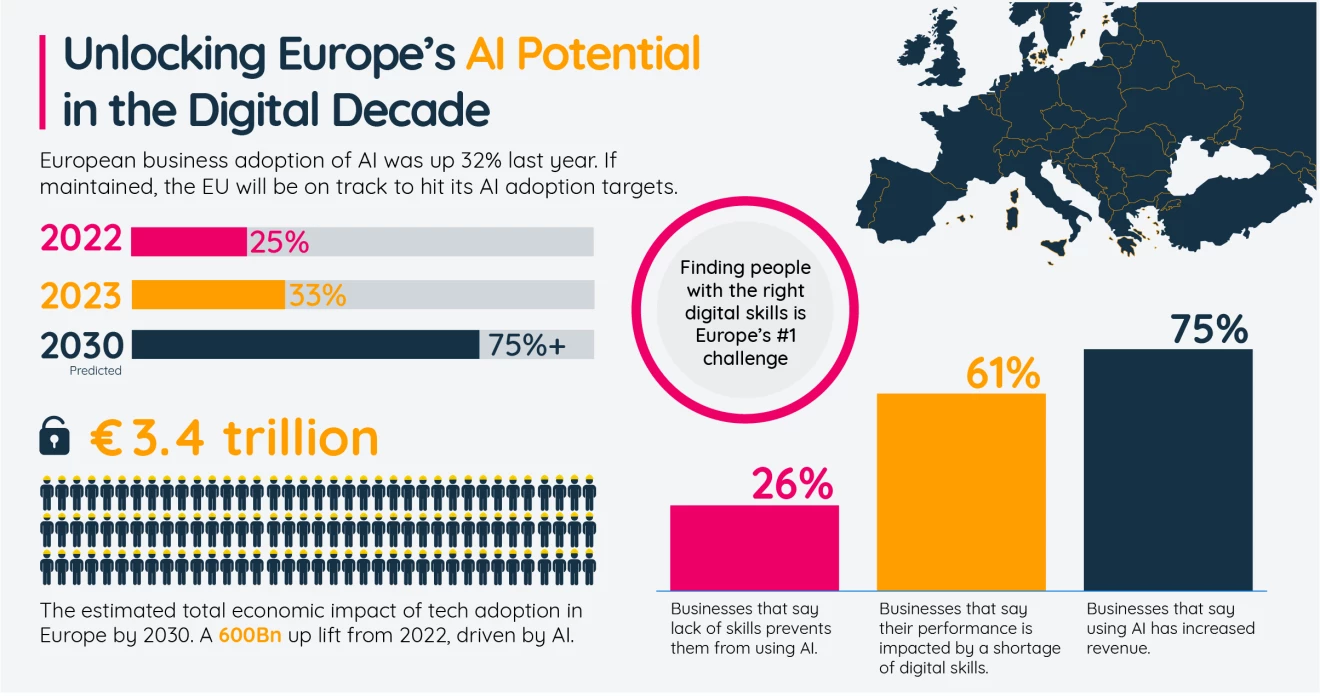

Recent figures from Amazon Web Services suggest that over a third of European companies had adopted AI by 2023, and forecast an adoption rate of over 75% by 2030.

The technology behind AI

If you are exploring the use of LLMs for your business, it's important to first understand some technical fundamentals.

What is an LLM?

LLMs are AI systems designed to understand and generate text. LLMs are trained on extensive datasets comprising a wide array of text from the internet, including books, articles, and websites, enabling them to learn the statistical properties of language, including grammar, syntax, and even some aspects of common sense reasoning and world knowledge.

Despite their capabilities, LLMs have limitations, most notably generating plausible but incorrect answers (hallucination) and lacking up-to-date information or specialized knowledge that was not part of the training dataset.

The best-known LLM-based product today is ChatGPT from OpenAI. However, there are various alternatives under both proprietary and open-source licenses, some of which rival ChatGPT's capabilities according to the Chatbot Arena leaderboard:

- Claude (proprietary) from Anthropic

- Gemini (proprietary) and Gemma (open source) from Google

- Llama (open source) from Meta

- Mistral and Mixtral (both open source) from Mistral AI

And many more.

Teaching an LLM about your proprietary data using Retrieval-augmented generation

As mentioned above, a major limitation of LLMs is their lack of specialized knowledge. In simple cases, it may be enough to pass this knowledge as context together with the request. However, this is limited by the context window: the maximum amount of text (measured in tokens) the LLM can process in a single request. You could also train or fine-tune a custom LLM on your specialized knowledge, but because this is substantially more costly, it is not economical for datasets that change frequently. If the LLM needs access to a large amount of dynamic information, such as a product catalogue or a document library, a more sophisticated approach is required:

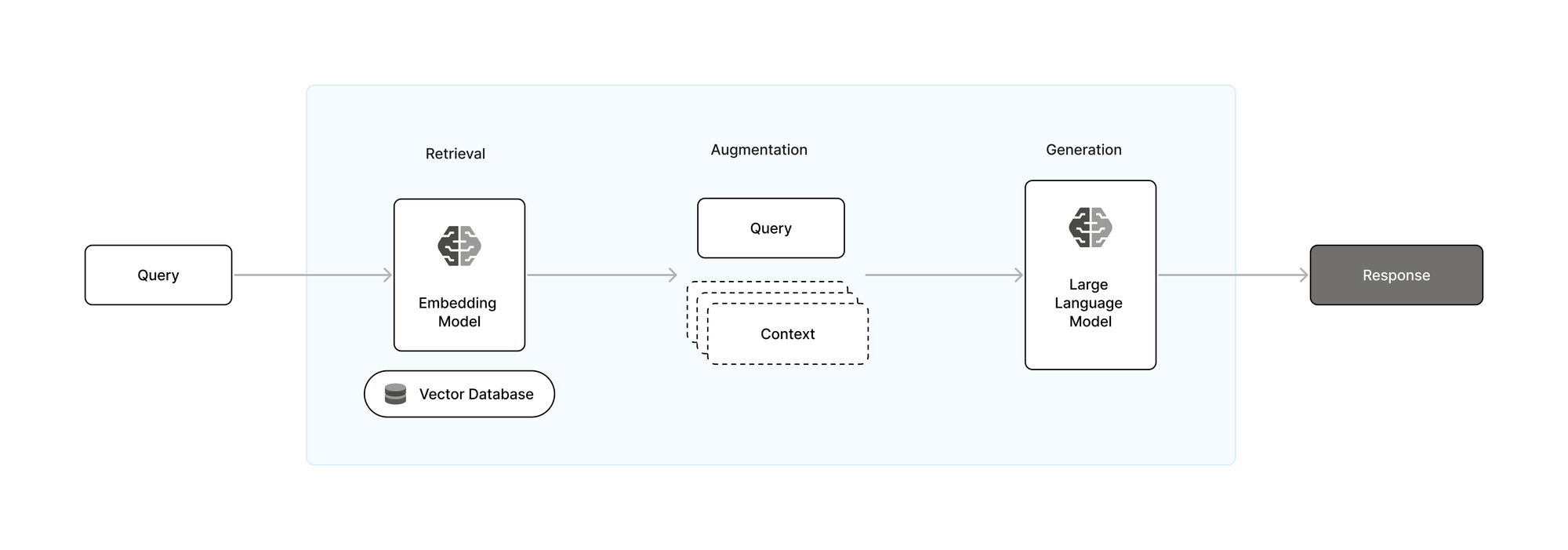

The core idea behind Retrieval-Augmented Generation (RAG) is to enhance LLMs by dynamically incorporating external information during the generation process. To achieve this, a retrieval stage is added before the generation stage.
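To make the two stages concrete, here is a minimal sketch of how they fit together. It is not how Azure AI Studio implements RAG internally: `answerWithRag` is a hypothetical function, and `retrieve` and `generate` are injected placeholders standing in for a vector search and an LLM call.

```typescript
// Hypothetical stand-ins: a search over your documents and a call to an LLM
type Retrieve = (query: string, topN: number) => Promise<string[]>;
type Generate = (prompt: string) => Promise<string>;

async function answerWithRag(
  question: string,
  retrieve: Retrieve,
  generate: Generate,
  topN = 3
): Promise<string> {
  // Stage 1 (retrieval): fetch the passages most relevant to the question
  const passages = await retrieve(question, topN);

  // Stage 2 (generation): the LLM sees the question together with the passages
  const prompt = [
    "Answer the question using only the sources below.",
    ...passages.map((passage, i) => `Source ${i + 1}: ${passage}`),
    `Question: ${question}`,
  ].join("\n");
  return generate(prompt);
}
```

Because the retrieved passages are inserted at request time, updating the underlying documents changes the answers without touching the model itself.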

The retrieval stage quickly sifts through large amounts of data to find the pieces of information most relevant to the request. This is often powered by a vector database and an embedding model: queries and documents are represented as vectors in a high-dimensional space, so that texts with similar meaning end up close to each other and can be found through efficient similarity matching. Once the relevant information is retrieved, it is passed to the generation stage, which combines the input query and the retrieved documents to produce a coherent, contextually enriched response. This approach not only significantly improves the quality and relevance of the generated text but also allows the model to stay up to date without the need for constant retraining.
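The similarity matching described above can be sketched as a brute-force, in-memory search ranking documents by cosine similarity to the query vector. This is an illustration only: `EmbeddedDoc` and `topK` are hypothetical names, the vectors are assumed to come from an embedding model, and production vector databases typically use approximate nearest-neighbour indexes rather than scanning every document.

```typescript
interface EmbeddedDoc {
  id: string;
  vector: number[]; // embedding produced by an embedding model
}

// Cosine similarity: 1 = same direction, 0 = unrelated (orthogonal)
function cosineSimilarity(a: number[], b: number[]): number {
  if (a.length !== b.length) throw new Error("Vectors must have the same dimension");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0; // avoid division by zero
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Returns the k documents whose vectors are most similar to the query vector
function topK(query: number[], docs: EmbeddedDoc[], k: number): { id: string; score: number }[] {
  return docs
    .map((doc) => ({ id: doc.id, score: cosineSimilarity(query, doc.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}
```

The toy vectors in a quick check would have three dimensions; real embeddings are much larger (text-embedding-ada-002, for example, produces 1,536-dimensional vectors).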

The market for RAG solutions is currently evolving at the same pace as the LLM market. There are many proprietary vector databases, such as Azure AI Search, as well as open-source solutions such as Chroma. General-purpose databases such as Solr, Elasticsearch, or MongoDB also support vector data. Embedding models are offered by most major LLM providers.

The cost of running a RAG system

Both training and operating (inference) LLMs and RAG systems are compute-intensive and require specialized hardware such as GPUs. While it is possible to buy or rent the necessary servers, it is often more cost-effective to use cloud-based services that scale with your actual usage.

All leading cloud providers offer services for running AI systems. Microsoft Azure is a leading provider of AI-related cloud services and, thanks to its partnership with OpenAI, is the only major cloud provider offering direct access to OpenAI's models, such as the GPT models behind ChatGPT.

Building an AI chat mobile app

To demonstrate the use of AI for a typical business case in knowledge management, we will build a chatbot that answers employees' questions about a company's processes and workflows. As our dataset, we will use the Hybrid Heroes company handbook (600+ pages). We will use Azure AI Studio to deploy a RAG system and React Native to build a mobile app that acts as the user interface.

Deploying a RAG system with our own data

Azure AI Studio provides a user-friendly platform for building, training, and deploying AI systems, offering both code-based and no-code tools for data scientists and developers of varying expertise. Using AI Studio, we can deploy a RAG system based on popular OpenAI models and our own uploaded data in just a few steps.

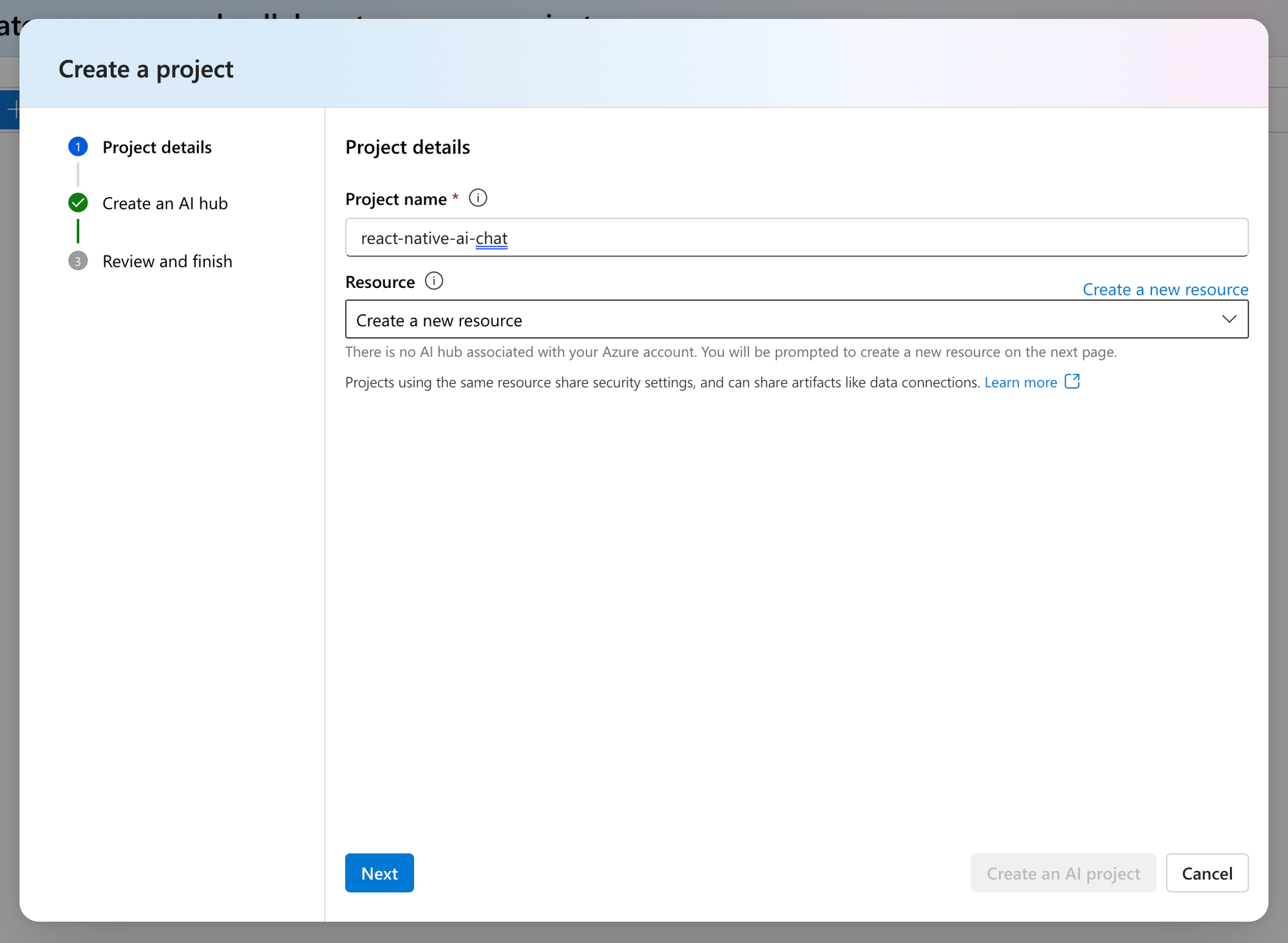

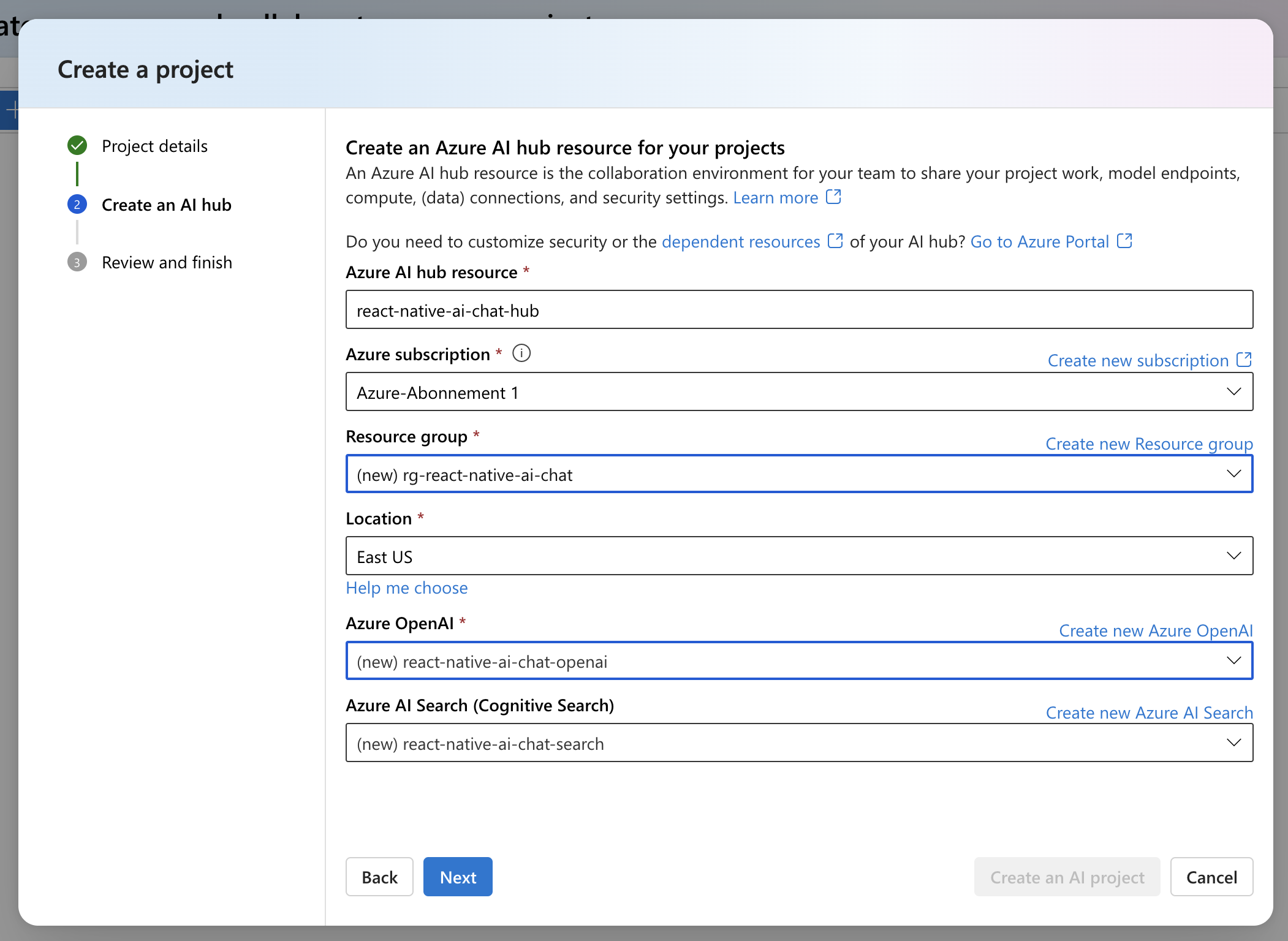

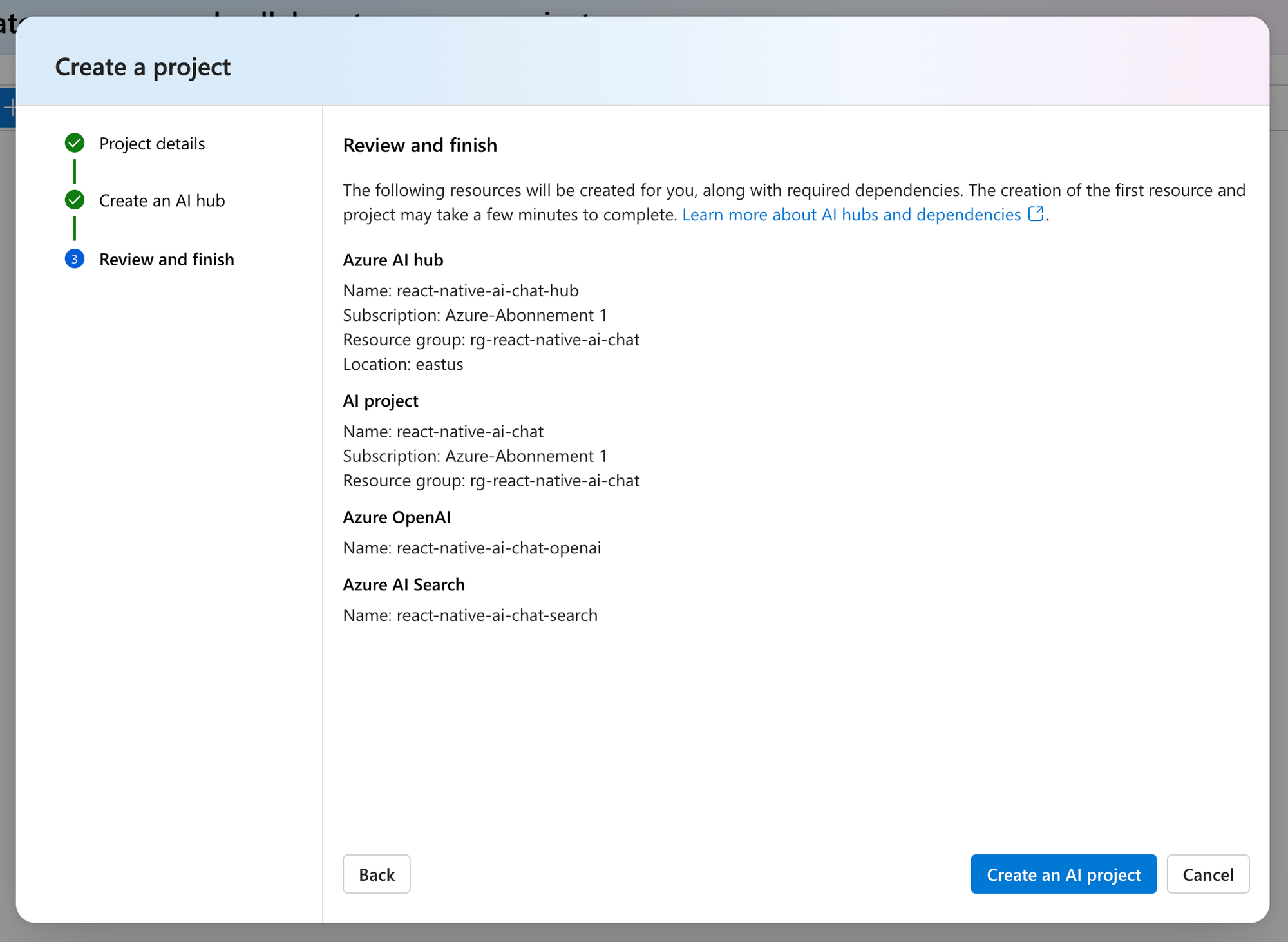

First, we need to create a new AI project and Azure resource. At the time of writing, Azure AI Studio is in public preview, so keep in mind that some services may not yet be available in all regions.

Project creation in Azure AI Studio

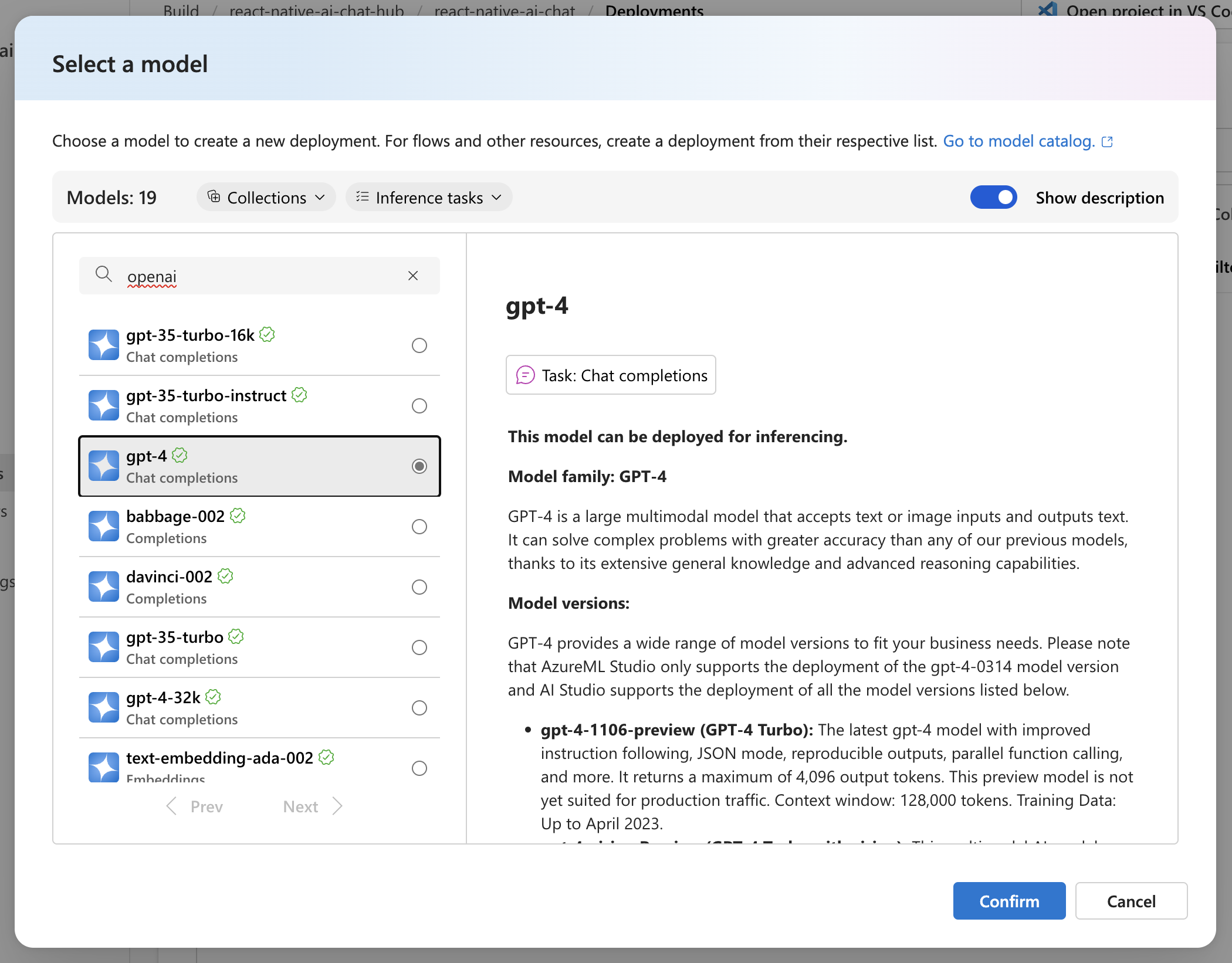

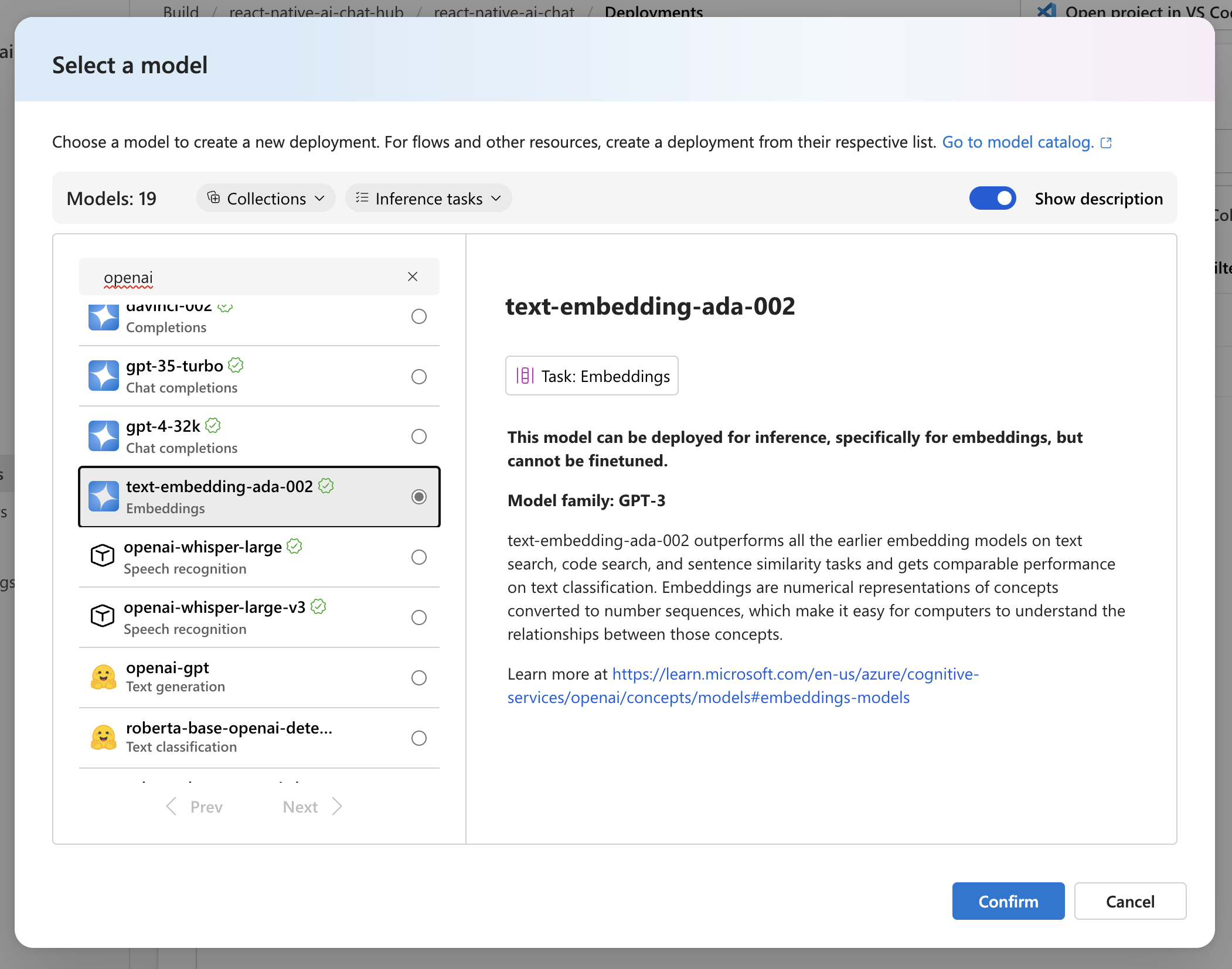

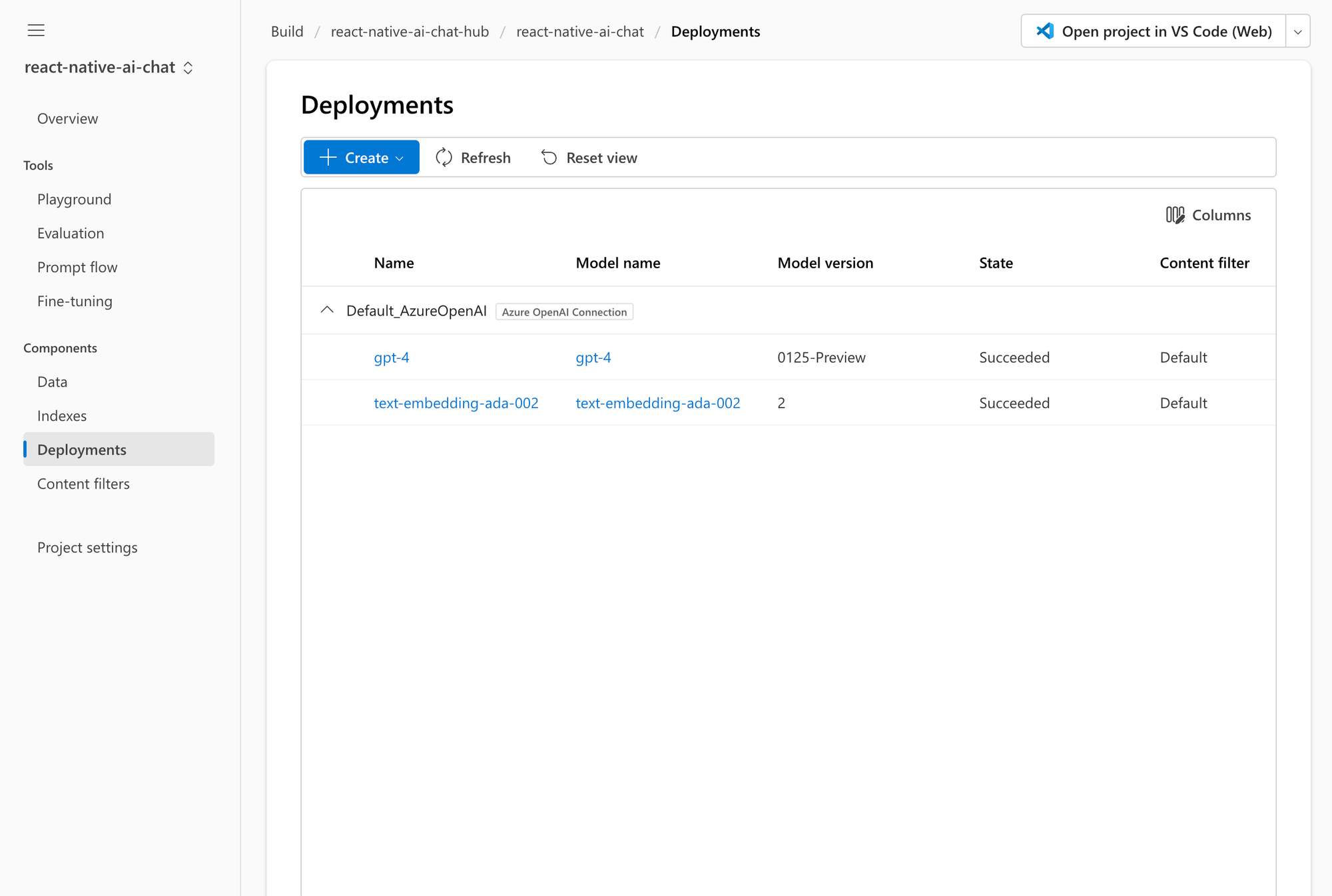

Once the project is created, we are directed to the project overview. Before we can take the next steps, we need to deploy some AI models: OpenAI's GPT-4 as the LLM, and OpenAI's Ada embedding model (text-embedding-ada-002) to create vectors for our company handbook and for search queries. We can deploy both using the deployments panel.

Model deployment in Azure AI Studio

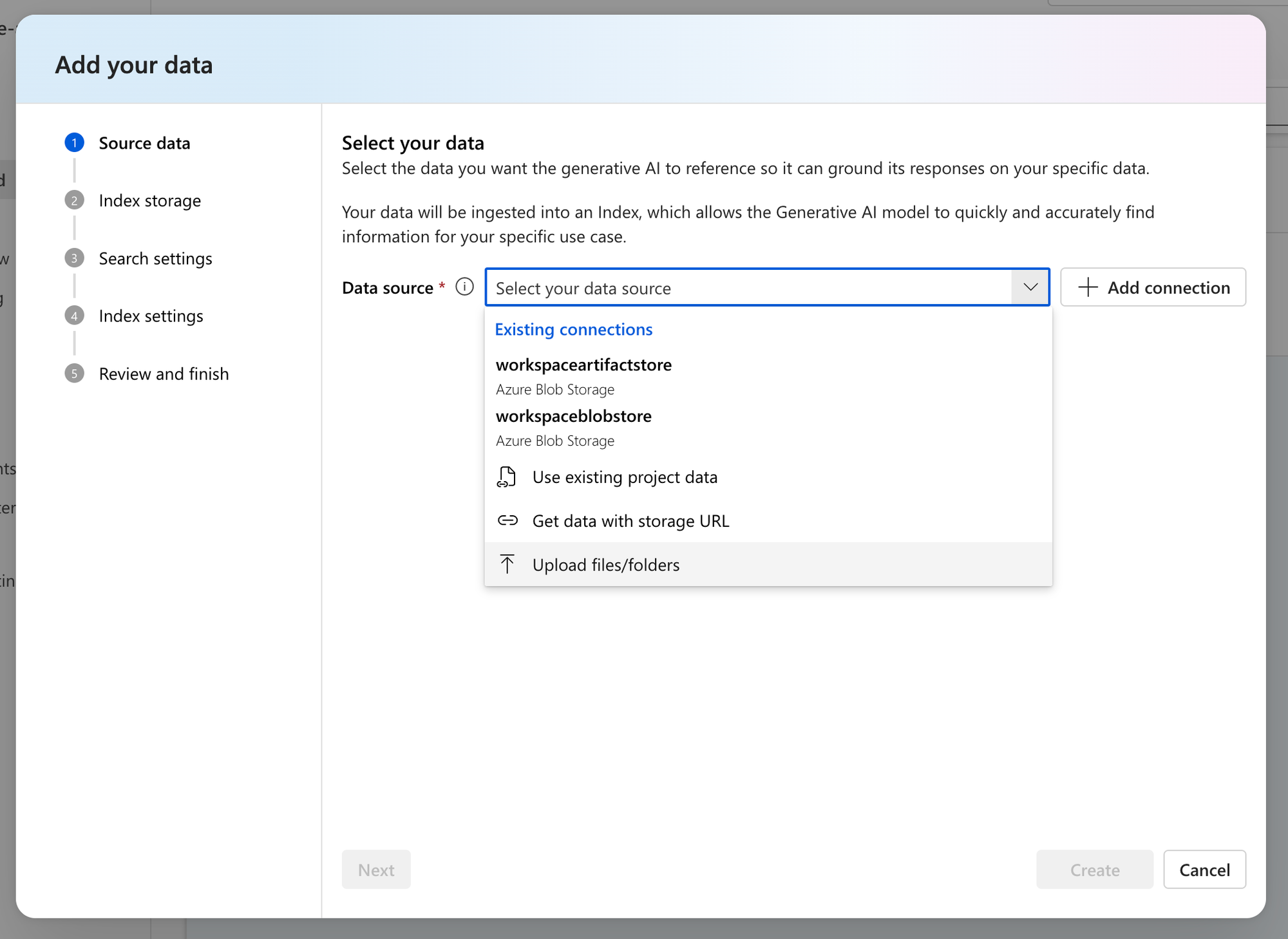

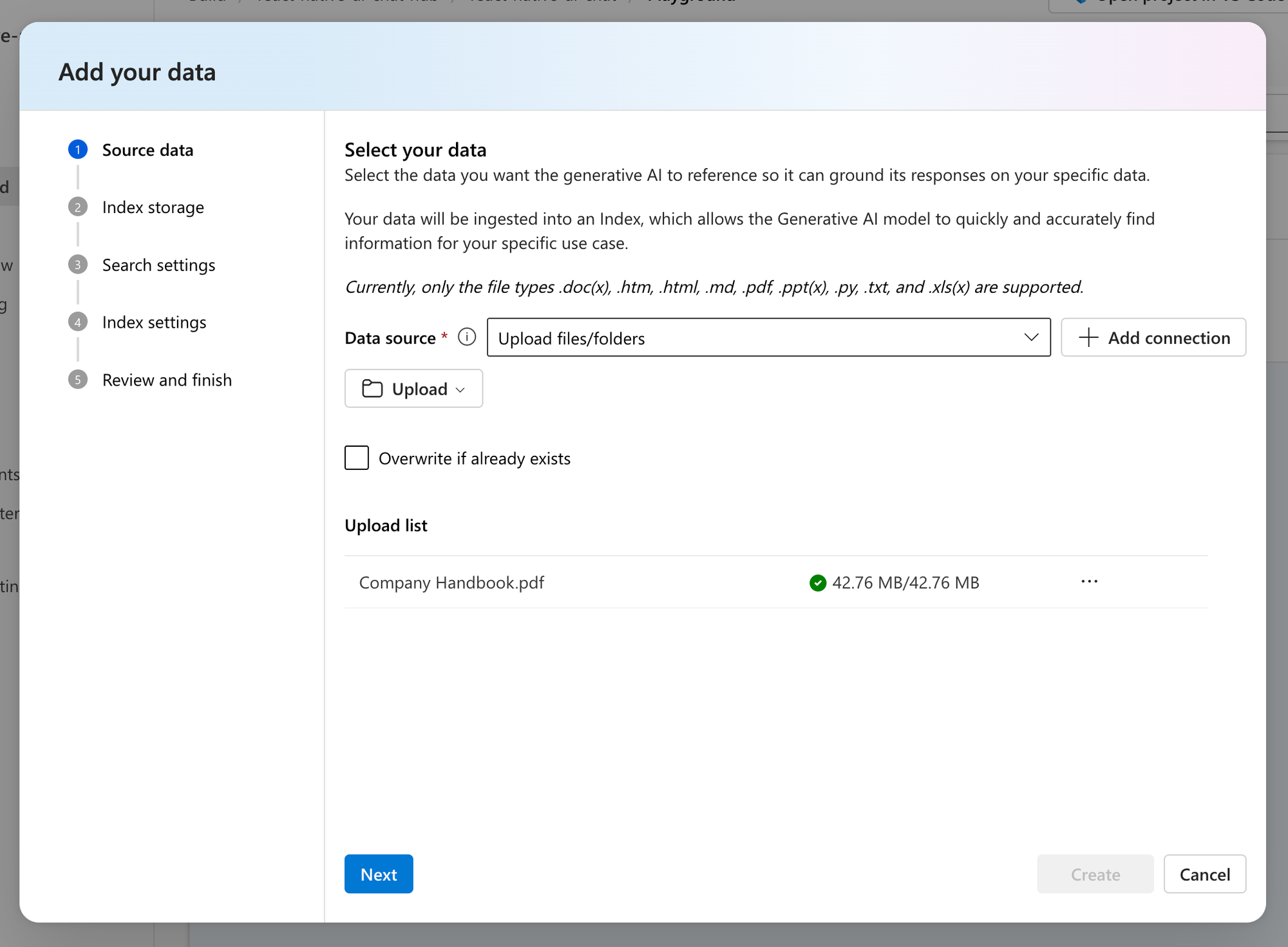

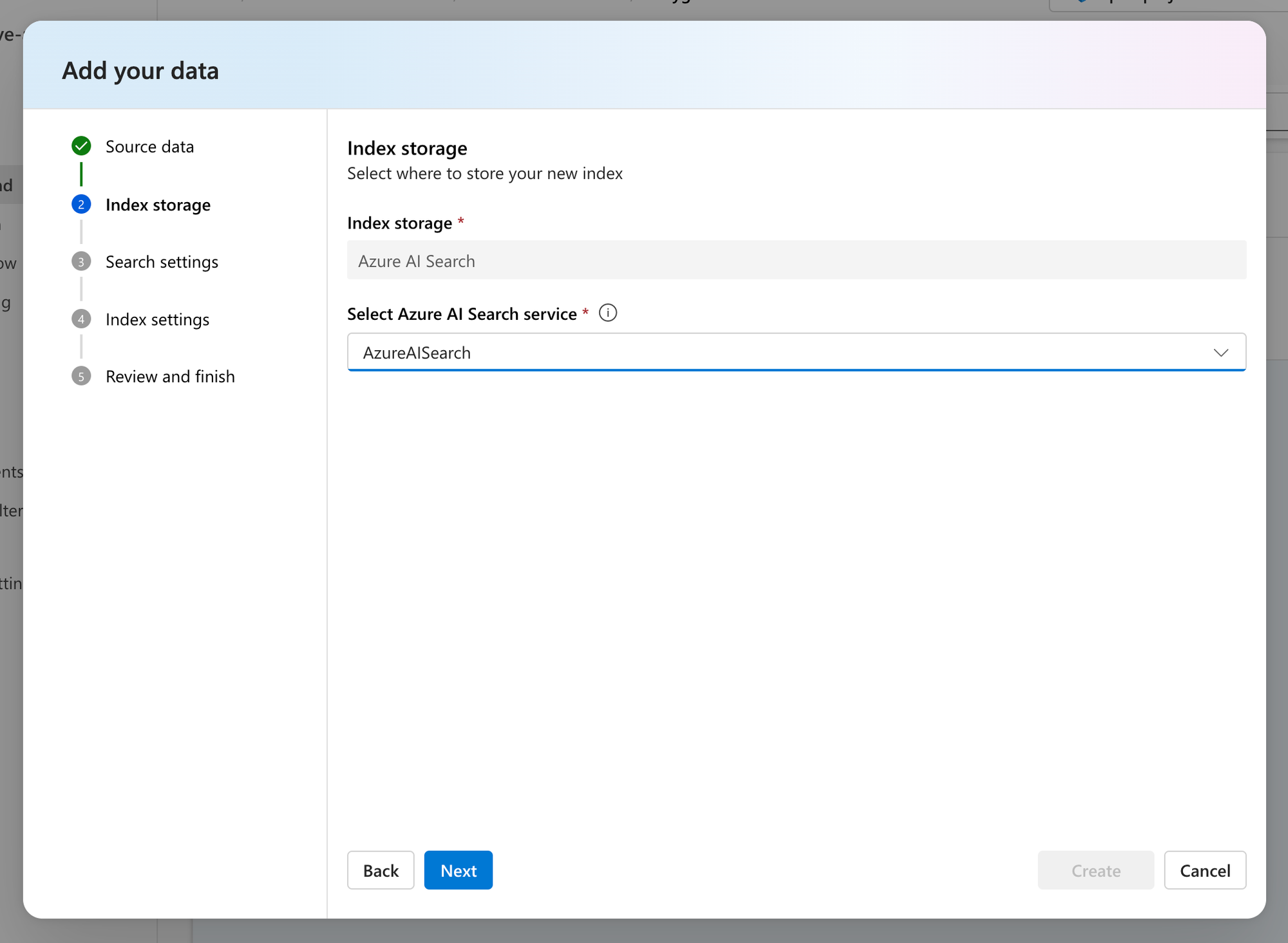

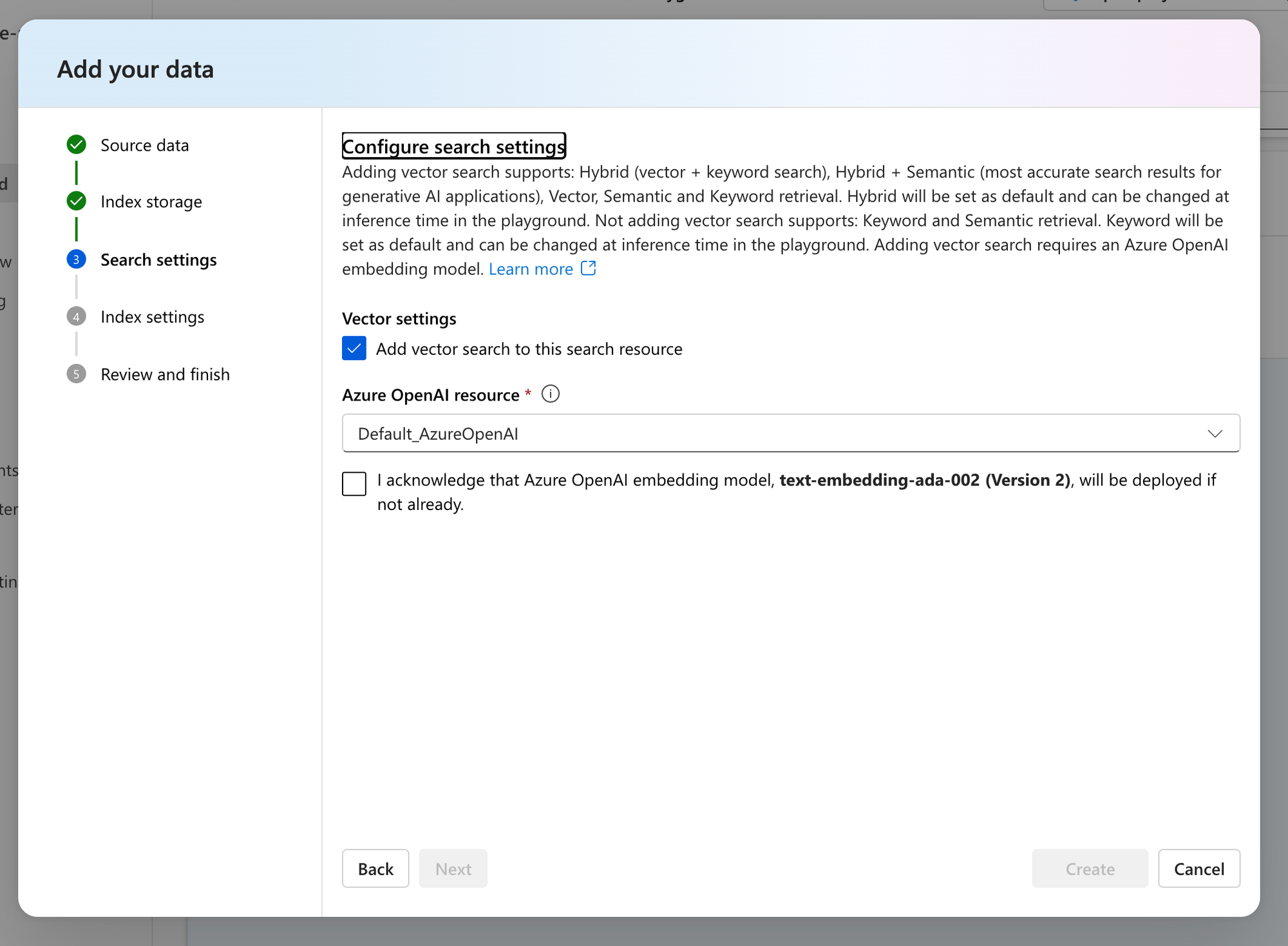

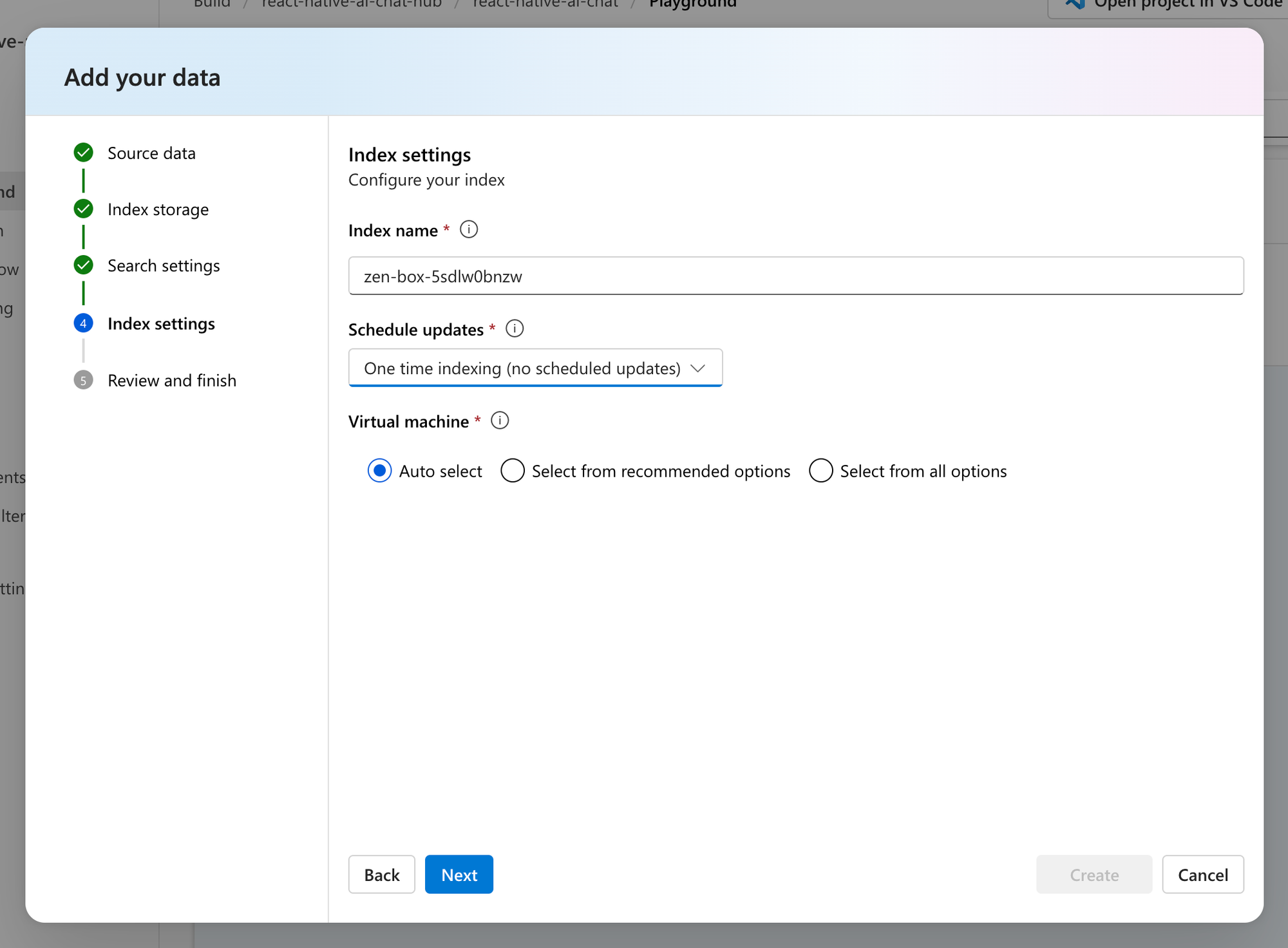

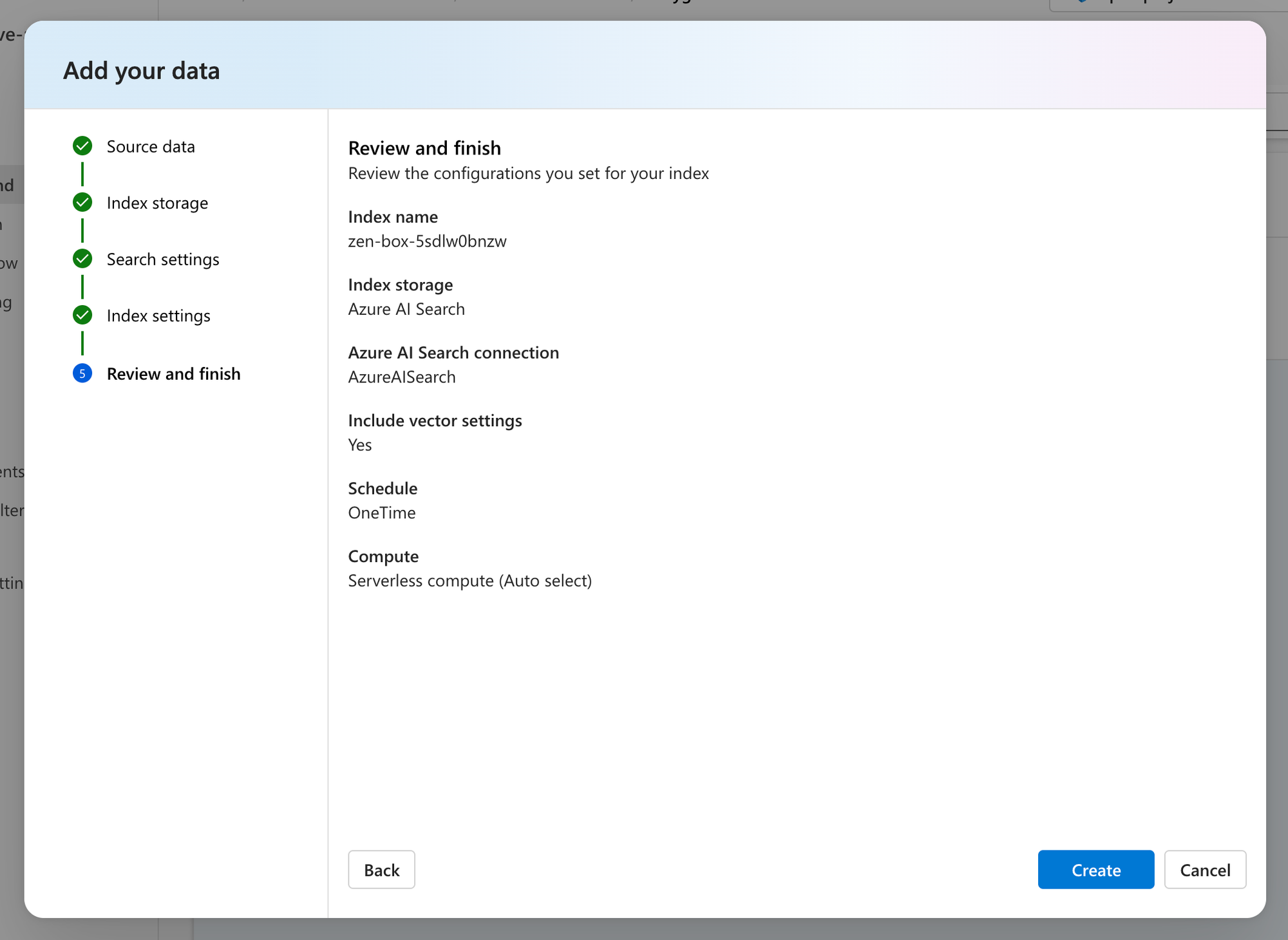

Next, we need to upload our data. To do this, we head to the playground panel and open the dialog for adding data. As the data source, we choose to upload our own files and select our company handbook. In the following steps, we configure Azure AI Search as the vector database and Ada as the embedding model for our dataset.

Uploading data to Azure AI Studio

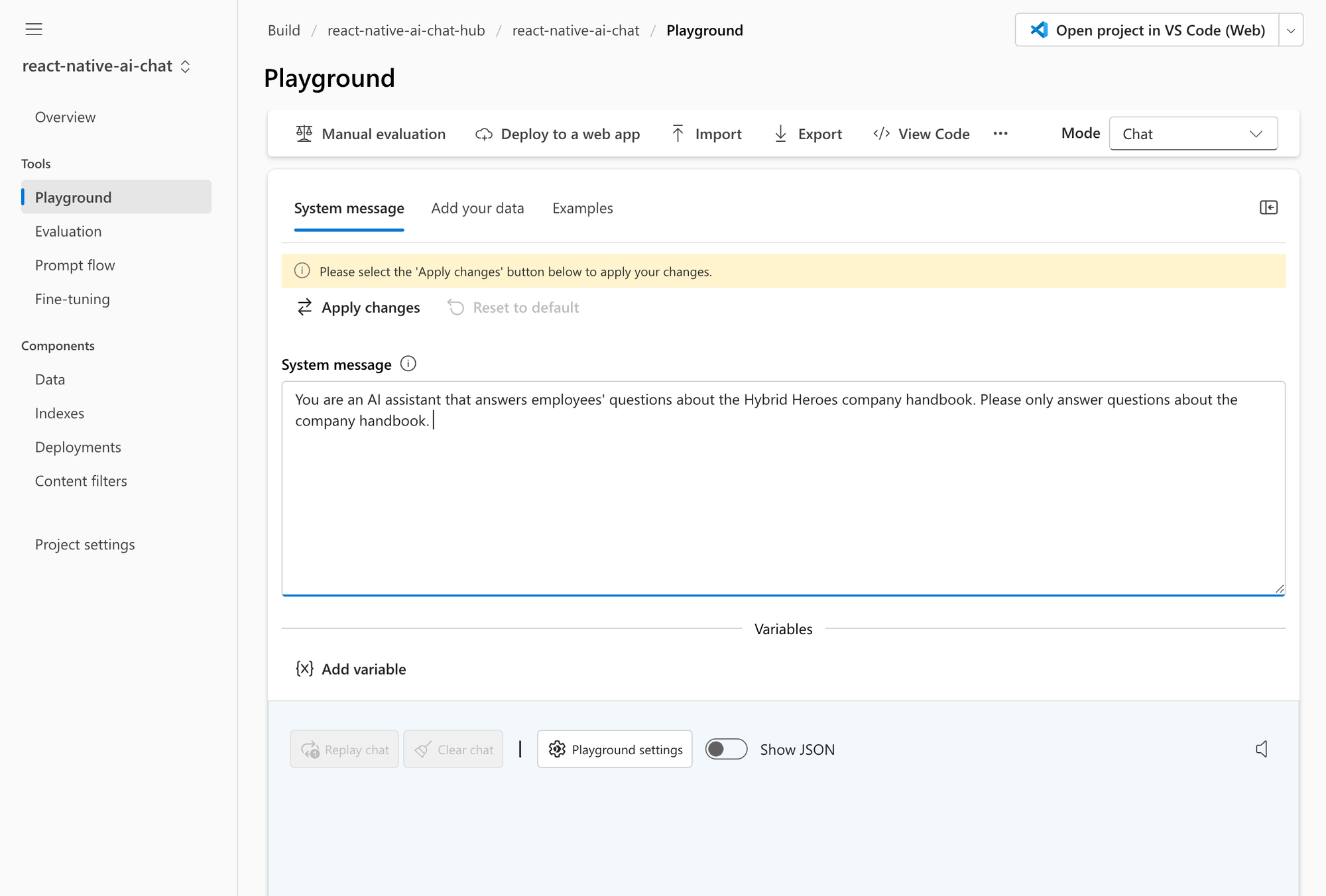

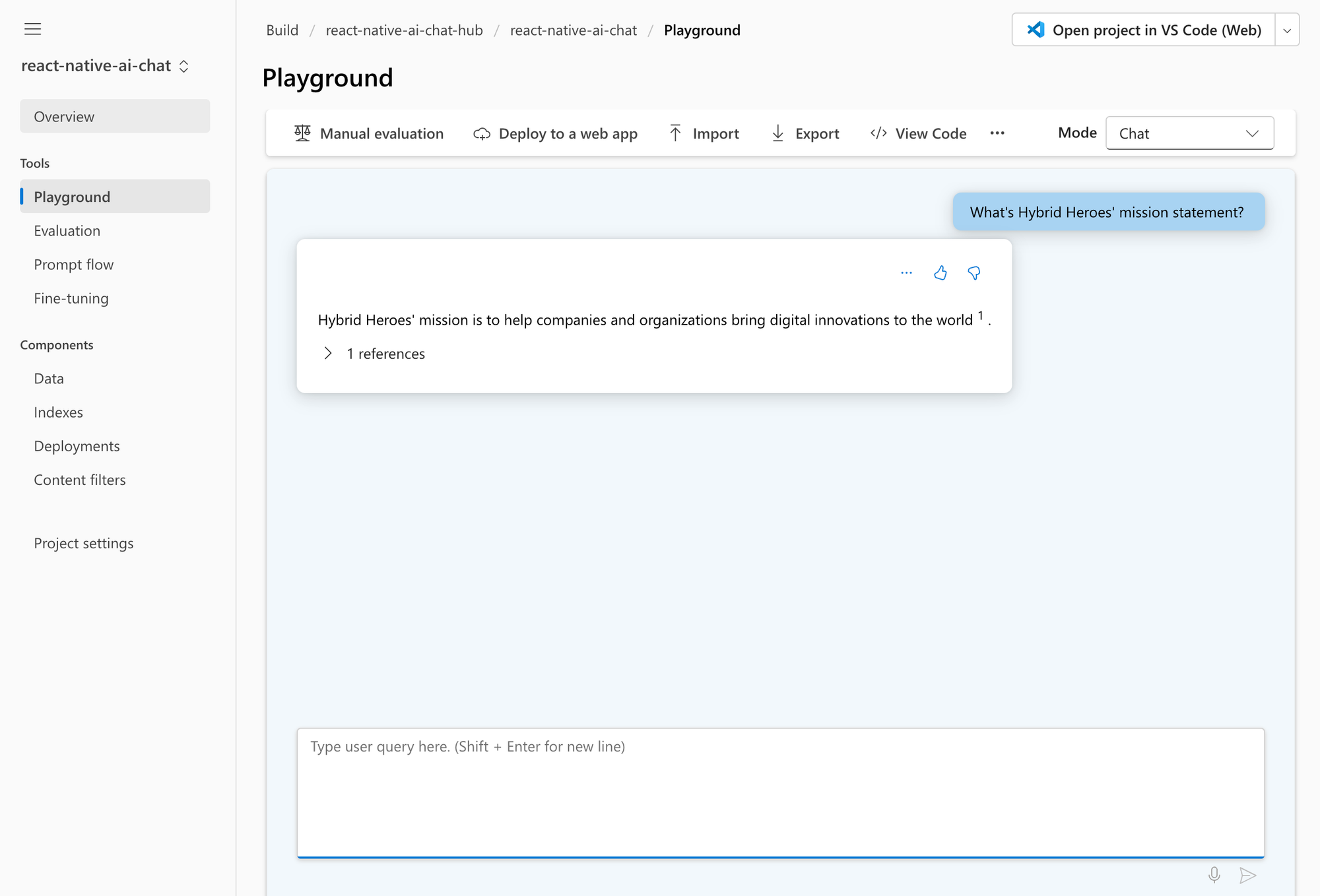

While the embedding model ingests our data into the vector database, we head over to the system message and give our AI chatbot some personality by writing a prompt that describes its desired behavior. Finally, once the data has been loaded into AI Search, we can use the playground to verify that our RAG system works as expected by asking questions about our company handbook.
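The setup steps above produce several values the app needs: the Azure OpenAI endpoint and key, plus the Azure AI Search endpoint, index name, and key. The app code later in this article reads them from an `ENV` object whose definition is not shown. As a hypothetical sketch, such an object could be assembled and validated as follows; `loadConfig` and `REQUIRED_KEYS` are illustrative names, and how the raw values reach the app (for example through your build tooling) is left open.

```typescript
// Hypothetical: the configuration values the chat screen expects
const REQUIRED_KEYS = [
  "AZURE_OPENAI_ENDPOINT",
  "AZURE_OPENAI_API_KEY",
  "AZURE_AI_SEARCH_ENDPOINT",
  "AZURE_AI_SEARCH_INDEX",
  "AZURE_AI_SEARCH_API_KEY",
] as const;

type AppConfig = Record<(typeof REQUIRED_KEYS)[number], string>;

// Fails fast with a readable message if any value is missing or empty
function loadConfig(source: Record<string, string | undefined>): AppConfig {
  const missing = REQUIRED_KEYS.filter((key) => !source[key]);
  if (missing.length > 0) {
    throw new Error(`Missing configuration values: ${missing.join(", ")}`);
  }
  const config = {} as AppConfig;
  for (const key of REQUIRED_KEYS) {
    config[key] = source[key] as string; // safe: checked above
  }
  return config;
}
```

Failing at startup gives a clear error instead of an opaque authentication failure on the first chat request. Keep in mind that any key shipped inside the app bundle can be extracted by users.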

Creating mobile app user interface

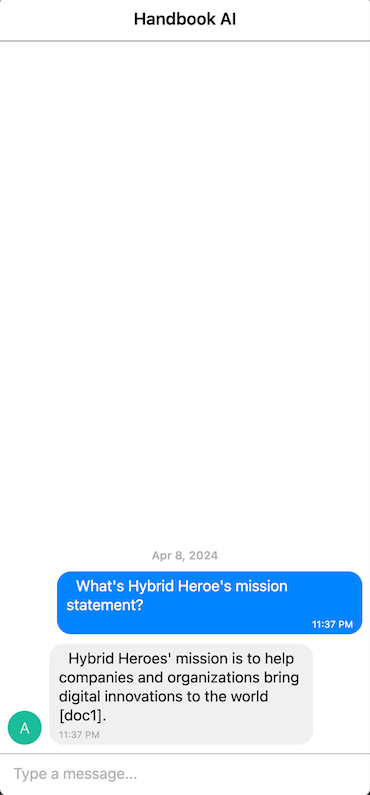

Most use cases require the AI chat to be integrated into an existing user interface, such as a mobile app. To demonstrate this, we will build a simple mobile app using React Native. React Native is a good choice because it lets us create iOS, Android, and web apps, among other platforms, from a single codebase. Thanks to Gifted Chat, a UI library for building chat interfaces, React Native is also particularly well suited to our AI chat app.

First, install Gifted Chat (the `react-native-gifted-chat` package) and create a basic chat interface.

```tsx
import { useState } from "react";
import { SafeAreaView, View, Text, StyleSheet } from "react-native";
import { GiftedChat, IMessage } from "react-native-gifted-chat";

export default function App() {
  const [messages, setMessages] = useState<IMessage[]>([]);

  // Returns the AI's reply messages; implemented in the next step
  const getChatResponse = async (currentMessages: IMessage[]): Promise<IMessage[]> => {
    // TODO
    return [];
  };

  const onSend = async (newMessages: IMessage[] = []) => {
    // Gifted Chat keeps messages newest-first; append() prepends the new ones
    const updatedMessages = GiftedChat.append(messages, newMessages);
    setMessages(updatedMessages);

    const response = await getChatResponse(updatedMessages);
    // Functional update so messages added while waiting are not overwritten
    setMessages((previous) => GiftedChat.append(previous, response));
  };

  return (
    <SafeAreaView style={styles.container}>
      <View style={styles.header}>
        <Text style={styles.title}>Handbook AI</Text>
      </View>
      <GiftedChat
        messages={messages}
        onSend={(newMessages) => onSend(newMessages)}
        user={{
          _id: 1, // the local user; AI replies use _id 2
        }}
      />
    </SafeAreaView>
  );
}

const styles = StyleSheet.create({
  container: {
    flex: 1,
  },
  header: {
    height: 48,
    borderBottomWidth: 1,
    borderBottomColor: "gray",
    justifyContent: "center",
    alignItems: "center",
  },
  title: {
    fontSize: 18,
    fontWeight: "600",
  },
});
```

Then, install the Azure OpenAI client library (`@azure/openai`) and connect the user interface to our AI Studio project.

```tsx
...
import { OpenAIClient, AzureKeyCredential } from "@azure/openai";

// Shared instructions for the chat model and the Azure AI Search extension
const SYSTEM_MESSAGE =
  "You are an AI assistant that answers employees' questions about the Hybrid Heroes company handbook. Please only answer questions about the company handbook.";

export default function App() {
  ...
  // Drives a typing indicator, e.g. via <GiftedChat isTyping={loading} ... />
  const [loading, setLoading] = useState(false);

  const getChatResponse = async (currentMessages: IMessage[]): Promise<IMessage[]> => {
    // ENV (not shown) holds the endpoints, keys and index name from AI Studio.
    // API keys bundled into a client app can be extracted; see Next Steps.
    const client = new OpenAIClient(
      ENV.AZURE_OPENAI_ENDPOINT,
      new AzureKeyCredential(ENV.AZURE_OPENAI_API_KEY)
    );

    setLoading(true);
    try {
      // Option names follow the 1.0.0-beta releases of @azure/openai;
      // check them against the version you install.
      const completions = await client.getChatCompletions(
        "gpt-4", // name of the GPT-4 deployment in AI Studio
        [
          { role: "system", content: SYSTEM_MESSAGE },
          // Gifted Chat stores messages newest-first; the API expects chronological order
          ...currentMessages
            .map((message) =>
              message.user._id === 1
                ? { role: "user" as const, content: message.text }
                : { role: "assistant" as const, content: message.text }
            )
            .reverse(),
        ],
        {
          azureExtensionOptions: {
            extensions: [
              {
                type: "azure_search",
                endpoint: ENV.AZURE_AI_SEARCH_ENDPOINT,
                indexName: ENV.AZURE_AI_SEARCH_INDEX,
                authentication: {
                  type: "api_key",
                  key: ENV.AZURE_AI_SEARCH_API_KEY,
                },
                queryType: "vector_simple_hybrid",
                inScope: true, // restrict answers to the indexed data
                roleInformation: SYSTEM_MESSAGE,
                strictness: 3,
                topNDocuments: 5,
                embeddingDependency: {
                  type: "deployment_name",
                  deploymentName: "text-embedding-ada-002",
                },
              },
            ],
          },
        }
      );

      const response: IMessage = {
        _id: new Date().getTime(),
        // message and content are optional in the response type
        text: completions.choices[0]?.message?.content ?? "",
        createdAt: new Date(),
        user: {
          _id: 2,
          name: "AI",
        },
      };
      return [response];
    } finally {
      setLoading(false);
    }
  };

  ...
}

...
```

With just a few lines of code, we have replicated the functionality of Azure AI Studio's playground: we can now ask our chatbot questions about our company handbook from a mobile app.
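The request above sends the entire conversation on every turn, so long chats will eventually exceed the model's context window. As a hedged sketch, a hypothetical helper like `toChatHistory` could replace the inline map-and-reverse step: it converts Gifted Chat's newest-first messages into chronological history and keeps only the most recent ones. `ChatUiMessage` is a minimal stand-in for the fields of Gifted Chat's `IMessage` used here, so the snippet runs on its own.

```typescript
// Minimal stand-in for the IMessage fields we need
interface ChatUiMessage {
  text: string;
  user: { _id: string | number };
}

interface HistoryMessage {
  role: "user" | "assistant";
  content: string;
}

// Converts newest-first UI messages into chronological chat history,
// keeping at most `maxMessages` of the latest messages
function toChatHistory(
  messages: ChatUiMessage[],
  localUserId: string | number,
  maxMessages: number
): HistoryMessage[] {
  return messages
    .slice(0, maxMessages) // newest-first, so this keeps the latest messages (and copies)
    .reverse() // oldest first; safe because slice() returned a copy
    .map((m): HistoryMessage => ({
      role: m.user._id === localUserId ? "user" : "assistant",
      content: m.text,
    }));
}
```

Capping by message count is a crude stand-in for counting tokens; the limit is an assumption you would tune to the model's context window.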

Next Steps

Keep in mind that this is a simplified example and should not be deployed to production without further improvements:

- Reflecting changes in the dataset: The vector database should be updated at a fixed interval or dynamically when changes to the dataset are made.

- Fine-tune RAG and LLM parameters: There are many parameters in both the LLM and the RAG configuration that can be adjusted to our needs, for example to change the tone and creativity of the responses or to control how much retrieved content is provided to the model.

- Handle specific user intents and usage scenarios: Our AI chat could become even more helpful if it were able to take actions. We could build specific flows that enable users to complete certain tasks right within the chatbot.

- Sandbox LLM requests and responses: Security is a major topic in AI as well. We should filter both the questions sent to the RAG system and the answers returned from it, making sure that no malicious requests are sent and no sensitive data is leaked.

- Provision a web service to handle authentication: Sensitive API keys should not be exposed in client applications, where they can be extracted. Instead, the app should communicate with a dedicated web service that holds the keys and could run in Azure next to our AI Studio project.
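To illustrate the last point, here is a hypothetical client-side sketch of talking to such a web service instead of calling Azure directly. The `/chat` route, the bearer-token scheme, and the `{ messages }` request and `{ answer }` response shapes are all assumptions about a backend you would build yourself; `fetchFn` is injected so that the app can pass the global `fetch` and tests can pass a fake.

```typescript
// Minimal fetch-compatible signature (the global fetch satisfies it)
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

interface ProxyMessage {
  role: "user" | "assistant";
  content: string;
}

// Sends the chat history to our own backend, which holds the Azure keys
async function askHandbookProxy(
  baseUrl: string,
  userToken: string,
  history: ProxyMessage[],
  fetchFn: FetchLike
): Promise<string> {
  const res = await fetchFn(`${baseUrl}/chat`, {
    method: "POST",
    headers: {
      "Content-Type": "application/json",
      // Authenticates the user against our service; no Azure key on the device
      Authorization: `Bearer ${userToken}`,
    },
    body: JSON.stringify({ messages: history }),
  });
  if (!res.ok) throw new Error(`Proxy request failed with status ${res.status}`);
  const data = (await res.json()) as { answer?: unknown };
  if (typeof data.answer !== "string") throw new Error("Unexpected response shape");
  return data.answer;
}
```

The service behind `/chat` would then make the `getChatCompletions` call with the Azure AI Search extension, and could also apply the request and response filtering mentioned above.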

Conclusion

RAG is an innovative approach to providing LLMs with additional information. The combination of Azure AI Studio and React Native allows us to build powerful AI chat apps that leverage this exciting technology and possess deep, specialized knowledge. In doing so, we can support a wide range of use cases across customer support, marketing, sales, legal, knowledge management, and many, many more.