How can AI support app development?

2023 has seen generative artificial intelligence (AI) tools break further into the mainstream, with widespread interest from workers across a diverse range of industries, from software development to healthcare.

In this article, we will explore the ways in which AI can be leveraged to support software development, and discuss the limitations and potential future of these quickly evolving technologies.

AI's rise to power: Will software agencies become obsolete?

As we begin our journey down the rabbit hole of AI-assisted development, it's only natural to wonder if this technology will render application development agencies obsolete. Will we wake up one day to find our beloved developers replaced by an army of AI-driven services, spitting out flawless applications while we sip our morning coffee?

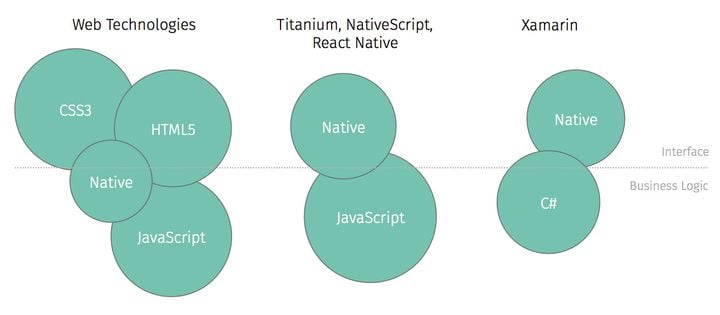

While the idea might be intriguing (or even a little terrifying), the reality is probably less interesting. Although we're seeing the emergence of undoubtedly useful utilities, it's important to remember that these tools are designed to assist with small, specific code-related tasks. Understanding and handling the broader picture of application design and architecture goes beyond their capabilities.

One must also acknowledge that there is a lot more to creating an app than writing code; it requires a deep understanding of the user experience, and the creativity to establish a consistent and innovative product. Additionally, the collaborative process of creating an app cannot be overlooked. Effective communication between technical experts, designers, and the client is an essential part of the process.

These tools may excel at tasks such as generating snippets of code or suggesting improvements, but in doing so they are more likely to enhance the capabilities of human developers than to replace them, freeing up time to tackle more complex challenges and focus on architecture and innovation.

Your sidekick, not your replacement

In order to effectively incorporate AI into the development process, it is important to understand its current strengths and limitations, and to ensure that human oversight is present. Some caveats worth keeping in mind include:

- Trained models are only as good as the data they are trained on.

- The goal of these models is to produce convincing output, not necessarily to be correct.

At a glance, these concerns might not seem so significant, but they can lead to problems if they aren't considered before committing.

You are what you eat: The importance of high-quality training data

One of the biggest advantages of AI tools is their ability to generate code based on patterns learned from the large sets of code they have been trained on.

However, this also means that the quality of the generated code is heavily influenced by the quality of the training data. While the vast variety of data can be a strength, it also means that the generated code might be outdated or of poor quality.

AI models produce output that reflects the average quality of their training data, which might not always be sufficient. Researchers at New York University assessed how GitHub Copilot output held up against common cybersecurity weaknesses and found roughly 40% of the generated code to be vulnerable. The findings are published in the paper "Asleep at the Keyboard? Assessing the Security of GitHub Copilot's Code Contributions".

Even if the quality of training data is high, one can still encounter unusable code. It's not unheard of for generative models to produce code based on outdated APIs, such as React's legacy class component pattern, or one of React Native's several deprecated modules.

    import React from 'react';
    // Deprecated ListView module
    import { View, ListView } from 'react-native';

    // Outdated class component pattern
    class MyListView extends React.Component {
      constructor(props) {
        super(props);
      }
      ...

Therefore, it's important for developers to carefully review any code produced by generative tools before incorporating it into their projects.

The fine line between convincing and correct

AI-powered code generators have become quite proficient at generating convincing output. There's just one problem: the code they produce may not actually be correct, because the goal of these models is to be convincing rather than to be correct. Combine that with the human cognitive tendency to favour recommendations from automated systems, and it's easy to see how issues might arise.

Let's say we have a model that has been trained on a large dataset of JavaScript code, and we give it the task of generating a function that takes in an array of numbers and returns the sum of those numbers. The AI model generates the following code:

    function sumArray(arr) {
      let sum = "";
      for (let i = 0; i < arr.length; i++) {
        sum += arr[i];
      }
      return sum;
    }

Seems reasonable, no? However, upon closer inspection, we can see an important mistake. Instead of being initialised as a number, the variable sum is mistakenly assigned an empty string. This means an array of numbers such as [0, 1, 2, 3] will result in the concatenated string "0123" rather than the expected total of 6.

As it stands, the model will present this solution with apparent confidence, despite the inevitably feature-breaking bug. An important part of prompt-driven code generation is being able to identify such issues and point them out to the tool.

In this case, a quick response of "this solution is incorrect, as it concatenates strings rather than adding numbers" would be sufficient to reach a working solution. This reinforces the importance of not just reviewing generated output, but also understanding how the output operates.
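For reference, the working solution that such a correction should lead to is sketched below. It changes only the initial value of the accumulator from the `sumArray` example above; the check at the end is an addition for illustration.

```javascript
// Corrected version: the accumulator starts as the number 0, not an empty string.
function sumArray(arr) {
  let sum = 0;
  for (let i = 0; i < arr.length; i++) {
    sum += arr[i];
  }
  return sum;
}

// A quick check on both the value and its type would have exposed the original bug.
const total = sumArray([0, 1, 2, 3]);
console.log(total, typeof total); // prints: 6 number
```

Checking the type as well as the value matters here, because the buggy version returns a string that can look plausible at a glance.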

Such limitations can be encountered in even the latest iterations of generative models, so you should be prepared to encounter and correct errors rather than taking every suggestion at face value.

Now, enough with the doom and gloom. Let's look at using this stuff!

GitHub Copilot

Copilot is GitHub's IDE-integrated "AI pair programmer", which has seen a huge increase in adoption over the last year. Since its initial release in 2021, Copilot has gained a swathe of new features which push it beyond just a 'code completion tool'.

It is often on the receiving end of criticism for the quality of its training data (GitHub is the largest collection of source code to ever exist, after all) and is also the target of legal disputes over alleged open-source license violations. However, this does not change the fact that, at the time of writing, it is probably the most feature-rich integrated AI tool available.

Getting started

Configuring Copilot is as easy as installing the Visual Studio Code extension and authorising your subscribed GitHub account. You can find details in their official quick-start guide.

Once complete, you can test whether your code suggestions are appearing correctly by typing a function name and awaiting Copilot's output.

These automated suggestions will likely account for the majority of your Copilot experience, but there are a few ways in which you can manipulate these to get more specific output.

Influencing Copilot output

As you can see in our previous example, Copilot is capable of using natural language processing to translate user inputs into code suggestions.

Any text in the current file can be used to inform the code suggestions. This includes comments, which are a great way of informing Copilot of implementation details.

In the example above, everything after the comment on line 1 was produced by either 1) hitting Tab to accept Copilot suggestions, or 2) hitting Enter to get to the next line.
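As a further illustration of a comment steering a suggestion, the sketch below pairs a descriptive comment with the kind of function body a completion tool might plausibly propose. The function name `isPalindrome` and its body are hypothetical, written for this example rather than captured from Copilot.

```javascript
// Return true if the given string is a palindrome,
// ignoring case, spaces and punctuation.
function isPalindrome(text) {
  // Keep only letters and digits, lower-cased.
  const cleaned = text.toLowerCase().replace(/[^a-z0-9]/g, '');
  const reversed = cleaned.split('').reverse().join('');
  return cleaned === reversed;
}
```

The more precisely the comment states the rules (here, what to ignore), the less the tool has to guess about edge cases.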

Introducing Copilot Labs

Copilot Labs enhances Copilot with a growing collection of bonus features. Once you install the Visual Studio Code extension, you can immediately start exploring these additional prototypes. One of the most exciting of these is Brushes, which gives Copilot the ability to perform a number of assistance actions by manipulating the code directly. Here's an example of Copilot recognising and replacing an incorrect comparator that was causing a bug.

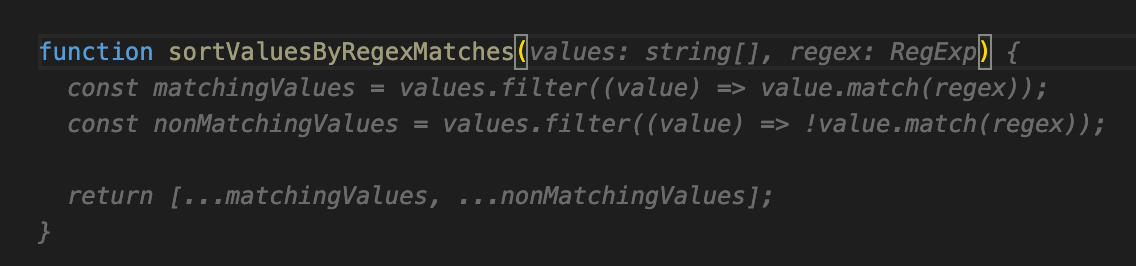

As you can see in the Brushes menu, there are a number of utilities which can directly manipulate code. Here's Copilot adding TypeScript typing to a previously untyped function.

Prompt-based tools

We're beginning to see prompt-based AI tools make the transition from 'trivial parlour trick' to indispensable part of the development process. GPT-4, the fourth iteration of OpenAI's GPT model, has even demonstrated the ability to generate functioning Unity game logic.

If you're unfamiliar with these services, here are some potential scenarios in which these prompt-based tools can provide support.

Code generation

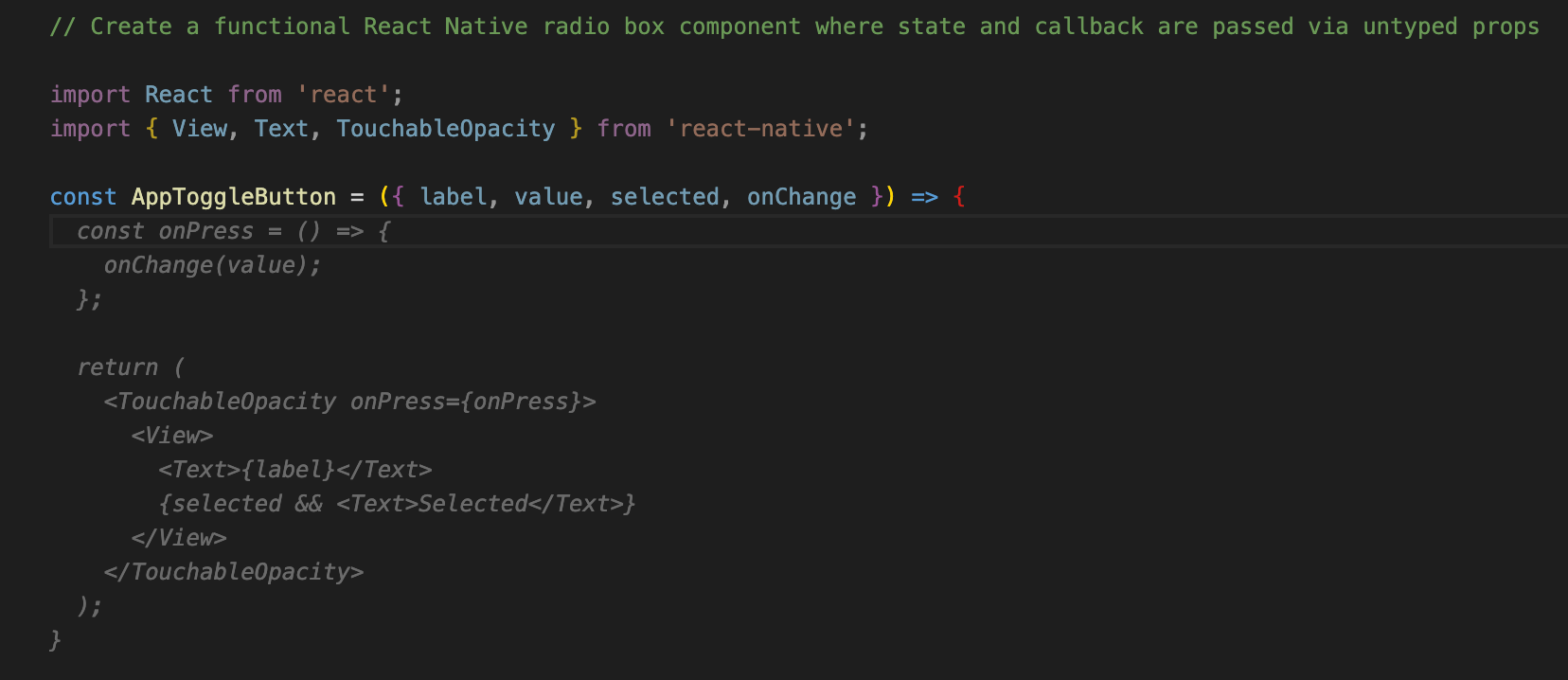

Generating functions and components based on natural language prompts is one of the most common use cases. Instead of spending time searching for examples, developers can simply describe their desired functionality.

> Generate a React Native component for a simple button with a primary color and rounded corners

The default version of GPT-4 has a context window of 8,192 tokens, with GPT-3.5 (the version currently available to non-premium ChatGPT users) having half of that. This means that larger, iterative code generation can often fall apart as the model loses track of implementation details from earlier in the conversation.
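To make the limit concrete, a rough budget check can flag conversations that are likely to overflow it. The sketch below assumes a common rule of thumb of about four characters per token for English text; real tokenisers vary, and `estimateTokens`, `fitsInContext` and the reserved reply size are hypothetical names and values chosen for illustration.

```javascript
const CONTEXT_LIMIT_TOKENS = 8192; // limit quoted in this article for the default GPT-4 model
const CHARS_PER_TOKEN = 4;         // assumption: rough average for English text

// Very rough token estimate; a real tokeniser would give different counts.
function estimateTokens(text) {
  return Math.ceil(text.length / CHARS_PER_TOKEN);
}

// True if the messages so far, plus room reserved for a reply, likely fit.
function fitsInContext(messages, reservedForReply = 1024) {
  const used = messages.reduce((count, message) => count + estimateTokens(message), 0);
  return used + reservedForReply <= CONTEXT_LIMIT_TOKENS;
}
```

When a check like this fails, restating the key implementation details in a fresh prompt tends to work better than continuing a long thread.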

Despite that, this tool performs decently at generating initial boilerplate code for well-known frameworks.

Refactoring assistance

Prompt-based tools are also capable of reading code snippets included in the prompt text. Refactoring code is a common part of software maintenance, and often involves fairly monotonous tasks such as replacing outdated code (e.g. React class components) or including modern APIs (e.g. React hooks).

> Refactor the following React Native class component to a functional component which uses hooks

    class Counter extends Component {
      constructor(props) {
        super(props);
        this.state = {
          count: 0,
        };
      }
      // ...
    }

Debugging

Another use for the code analysis capabilities of these tools is identifying and resolving errors, improving the efficiency of debugging sessions, or offering additional options for when you're completely stumped.

> The following JavaScript code is producing the error "TypeError: Cannot read property 'length' of undefined"

    function calculateAverage(numbers) {
      let sum = 0;
      for (let i = 0; i <= numbers.length; i++) {
        sum += numbers[i];
      }
      return sum / numbers.length;
    }
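For reference, one plausible fix a tool might suggest for the snippet above is sketched below. It assumes the reported error comes from calling the function without an array, and it also corrects the loop bound, since `i <= numbers.length` reads one element past the end. Throwing a `TypeError` for invalid input and returning 0 for an empty array are choices made for this example.

```javascript
function calculateAverage(numbers) {
  // Guard against the reported error: `numbers` being undefined or not an array.
  if (!Array.isArray(numbers)) {
    throw new TypeError('calculateAverage expects an array of numbers');
  }
  if (numbers.length === 0) {
    return 0; // assumption: treat an empty list as averaging to 0
  }
  let sum = 0;
  // Use `<` so the loop stops at the last valid index.
  for (let i = 0; i < numbers.length; i++) {
    sum += numbers[i];
  }
  return sum / numbers.length;
}
```

As with any generated fix, it is worth confirming that the suggestion addresses the actual cause of the error and not just the symptom in the message.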

The future of AI-assisted development

Given how rapidly these tools are changing, it's almost impossible to predict what AI-assisted development could look like even a year from now.

The last few months have seen many well-established products add AI-driven autocomplete as a feature. It wouldn't be wildly speculative to predict that this could become standard for many editors. An April Fools' blog post from last month jokingly claimed Xcode 15 would ship with this feature, but in the near future this might not sound so outlandish.

We would expect to see prompt-driven tools become more specialised, catering towards development-specific tasks. We're already beginning to see GPT-derived tools such as Phind.com, which specifically targets software developers. We might see this trend become more common, with models better suited to complex development tasks and offering better code analysis capabilities. ChatGPT's recently announced plugin support will also likely result in broader development utilities.

One thing that's certain is that existing tool providers are going to continue improving and expanding. Looking over the projects listed on GitHub's 'Next' site, it's hard not to notice that the list is dominated by Copilot and AI topics. Competing DevOps solution provider GitLab have also recently thrown their hat in the ring by announcing their generative 'Code Suggestions' beta.

No matter what the future of AI-assisted software development might look like, you can expect us to continue exploring the topic. Stay tuned!